Recently, in a packed room of over 100 professionals, Nuzha Yakoob shared with the hushed crowd how nature is the greatest innovator. Ms. Yakoob showcased Festo Robotics’ bionic zoo, from end-effectors inspired by elephant trunks to grippers modeled after chameleon tongues. My personal favorites are the robotic ants that collaborate over the cloud to accomplish specific jobs that would be impossible for any one of them to do alone. As I left #RobotLabNYC, I mused that biology holds the keys to unlocking the greatest challenges in computing and robotics.

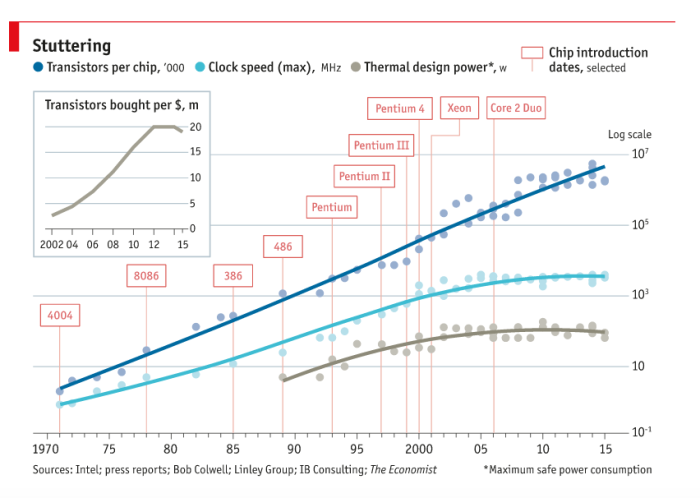

Since 1965, Moore’s Law has been the pillar of the modern computing age, which has also driven the greatest growth in robotics. However, many predict that we are reaching the limit on the number of transistors that can be packed onto a single chip. The law is named after Gordon Moore, former CEO of Intel, who observed more than 50 years ago that transistors were shrinking so quickly that every year twice as many could fit onto a single chip, leading to exponential growth of processing power. Moore’s Law was later adjusted to doubling every 18 months. (At RobotLabNYC, David Rose spoke about exponential growth as a driver and disrupter of everything in today’s connected world.) Today, many observe that gains in processing power between chip generations are slowing, indicating that we could be only years away from the end of Moore’s Law.
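To get a feel for how much the doubling period matters, here is a minimal Python sketch of the exponential growth described above. The function name `growth_factor` and the ten-year horizon are illustrative assumptions, not anything from Moore's original observation; the only inputs taken from the text are the two doubling periods (one year and 18 months).

```python
def growth_factor(years: float, doubling_period_years: float) -> float:
    """Return how many times larger the transistor count becomes after
    `years`, if it doubles once every `doubling_period_years`."""
    if doubling_period_years <= 0:
        raise ValueError("doubling period must be positive")
    # Each doubling period multiplies the count by 2.
    return 2.0 ** (years / doubling_period_years)


if __name__ == "__main__":
    # Compare the original yearly doubling with the 18-month version
    # over an (assumed) ten-year span.
    for period in (1.0, 1.5):
        factor = growth_factor(10, period)
        print(f"doubling every {period} years: about {factor:,.0f}x per decade")
```

Over a decade, yearly doubling gives roughly a thousandfold increase, while doubling every 18 months gives roughly a hundredfold increase, which is why even a modest stretch in the doubling period changes the long-term picture so sharply.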

“It’s time to start planning for the end of Moore’s Law, and that it’s worth pondering how it will end, not just when,” says Robert Colwell, former director of the Microsystems Technology Office at the Defense Advanced Research Projects Agency (DARPA).

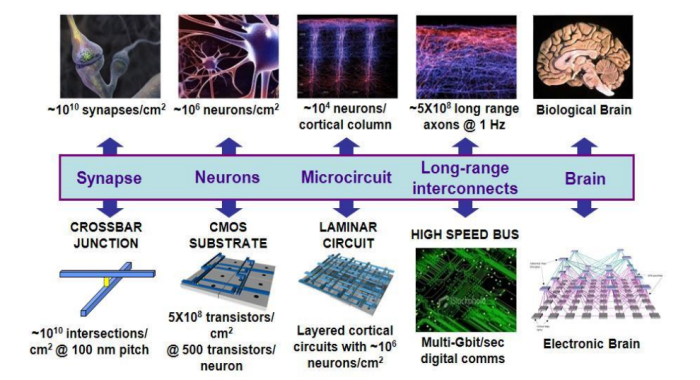

Semiconductors are in everything today, but if artificial intelligence is the future, silicon may not be the most energy-efficient source of compute power. Many designers are taking Ms. Yakoob’s approach by looking to biology, and to the brain in particular, as a model for future networks. The best-known example is a DARPA-funded program called SyNAPSE (Systems of Neuromorphic Adaptive Plastic Scalable Electronics), which is developing neuromorphic machine technology that scales to biological levels. More simply stated, it is an attempt to build a new kind of computer with a form and function similar to a mammal’s brain. The ultimate aim is to build an electronic microprocessor system that matches a brain in function, size, and power consumption. Such a system would recreate 10 billion neurons and 100 trillion synapses, consume one kilowatt (the same as a small electric heater), and occupy less than two liters of space.
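A quick back-of-the-envelope calculation shows how tight that budget is. The sketch below simply divides the stated targets (10 billion neurons, 100 trillion synapses, one kilowatt); the constant and variable names are my own, and spreading the power evenly across every neuron or synapse is a simplifying assumption, not a SyNAPSE specification.

```python
# Targets as stated in the text above.
NEURONS = 10_000_000_000          # 10 billion
SYNAPSES = 100_000_000_000_000    # 100 trillion
POWER_WATTS = 1_000.0             # one kilowatt

# Average fan-out: how many synapses each neuron would carry.
synapses_per_neuron = SYNAPSES // NEURONS

# Assumption: the whole power budget is shared evenly.
watts_per_neuron = POWER_WATTS / NEURONS
watts_per_synapse = POWER_WATTS / SYNAPSES

if __name__ == "__main__":
    print(f"synapses per neuron: {synapses_per_neuron:,}")
    print(f"power per neuron:    {watts_per_neuron * 1e9:.0f} nanowatts")
    print(f"power per synapse:   {watts_per_synapse * 1e12:.0f} picowatts")
```

Under that even-split assumption, each neuron gets about 100 nanowatts and each synapse about 10 picowatts, with roughly 10,000 synapses per neuron.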

Neuromorphic computing was originally conceived by Caltech Professor Carver Mead. In his 1990 IEEE paper, Mead wrote that “large-scale adaptive analog systems are more robust to component degradation and failure than are more conventional systems, and they use far less power. For this reason, adaptive analog technology can be expected to utilize the full potential of wafer-scale silicon fabrication.” In other words, the idea is to use analog circuits to mimic the neurobiological architectures found in our nervous system.

Researchers at Stanford University and Sandia National Laboratories announced last month a different approach to mimicking a mammal’s brain: creating an artificial synapse. The artificial synapse, first reported in Nature Materials, mimics the way a brain’s synapses learn from the signals that cross them. This means the device processes information and stores it in the same place, rather than spending energy to compute in a processor and then keep the result somewhere else. Artificial synapses could provide huge energy savings over traditional computing, especially for deep learning applications.

According to Alberto Salleo, co-author of the paper, “it works like a real synapse but it’s an organic electronic device that can be engineered. It’s an entirely new family of devices because this type of architecture has not been shown before. For many key metrics, it also performs better than anything that’s been done before with inorganics.”

This synapse may one day be part of a more brain-like computer, which could be especially beneficial for the computing behind voice-controlled interfaces like Alexa and autonomous cars. Past efforts in this field have produced high-performance neural networks supported by artificially intelligent algorithms, but these are still distant imitators of the brain that depend on energy-hungry computer hardware.

“Deep learning algorithms are very powerful but they rely on processors to calculate and simulate the electrical states and store them somewhere else, which is inefficient in terms of energy and time. Instead of simulating a neural network, our work is trying to make a neural network,” said Salleo’s co-author, Yoeri van de Burgt.

The artificial synapse is built from inexpensive organic materials composed of hydrogen and carbon, similar to a brain’s chemistry. The voltages applied to train the artificial synapse are also the same as those that move through human neurons. According to the researchers, recognition accuracy in their tests has been in the upper 90 percent range.

The idea of using natural materials to run fuel cells is expanding into robotics with novel microbial components. Researchers at the University of Rochester have built on a century-old process, using bacteria to generate an electrical current that can power robots via microbial fuel cells (MFCs). The researchers plan to use MFCs in wastewater, drawing on the bacteria there as a way to power new types of devices.

The new MFC takes a novel approach, using carbon as the conductor of electricity. Until the discovery by Lamberg, one of the Rochester researchers, most MFCs consisted of metal components or carbon felt that easily corrodes. His solution was to replace the metal parts with paper coated with carbon paste, which is a simple mixture of graphite and mineral oil. The carbon paste-paper electrode is not only cost-effective and easy to prepare; it also outperforms traditional materials.

Jonathan Rossiter, Professor of Robotics at the University of Bristol, has been utilizing the MFC created by the University of Rochester in robots to clean polluted waterways. Rossiter’s Row-bot feeds on the bacteria found in dirty water and uses it for propulsion. Row-bot is still in its conceptual stage, but the University of Bristol plans to develop swarms of autonomous water robots that operate indefinitely in “remote unstructured locations by scavenging its energy from the environment.”

“The work shows a crucial step in the development of autonomous robots capable of long-term self-power. Most robots require re-charging or refueling, often requiring human involvement,” exclaims Rossiter.

“We anticipate that the Row-bot will be used in environmental clean-up operations of contaminants, such as oil spills and harmful algal bloom, and in long term autonomous environmental monitoring of hazardous environments, for example those hit by natural and man-made disasters,” added co-researcher, Hemma Philamore.

As we enter the new age of computing, the rules have yet to be written. The lines between organic and inorganic matter are blurring. At some point, biologically inspired machines could well become a species unto themselves.