The star of the Super Bowl wasn’t on the field; it was the robot in the Michelob ULTRA commercial. The metal beast is shown living and playing among humans, seizing every opportunity to show off his brute strength. The bot smugly proves he can do anything better than his organic fellow citizens, except down a cold one. As the closing caption puts it, “It’s only worth it, if you can enjoy it.” Unlike previous dystopian attempts at illustrating the power of robots and artificial intelligence, the ad’s creators humorously embrace robot self-awareness. Explaining this hurdle, so often depicted in science fiction, Professor Hod Lipson of Columbia University’s Creative Machines Lab said in a 2015 interview, “Self-awareness is, I think, the ultimate AI challenge. We’re all working on it…if you look at the end game for AI, what’s the ultimate thing? It is to create—I think—a sort of self-awareness. It’s almost a philosophical thing—like we’re alchemists trying to recreate life—but ultimately I think, at least for many people, it’s the ultimate form of intelligence.”

This week Dr. Lipson’s research came a step closer to actually powering up “the ultimate form of intelligence.” As reported by ScienceDaily, Lipson’s lab unveiled the first robotic platform with inherent self-learning capabilities. The Columbia team set up an experiment with a robotic arm that had no prior knowledge of its size, mobility or capabilities. “Initially the robot does not know if it is a spider, a snake, an arm — it has no clue what its shape is,” states the study. To unlock the mystery of its own existence, the machine begins a process of self-discovery, methodically capturing thousands of images as it moves about its space. The output is a point cloud that feeds the network critical data about the arm’s boundaries and limitations, so that it can successfully navigate its surroundings. The Creative Machines Lab whimsically labels this intensive training process “babbling”; in this case, it took three days to go from dumb metal to smart machine. After the 72-hour period, the robot was remarkably able to perform “pick-and-place” operations. Running open-loop, that is, relying solely on its self-model without any feedback, the system achieved a success rate of 44%, which is not bad for a self-starter with only three days on the job. The study’s lead author, Robert Kwiatkowski, compared this open-loop performance to “trying to pick up a glass of water with your eyes closed, a process difficult even for humans.”

The Columbia team continued to test the AI through novel experiments, including replacing healthy parts with damaged ones to see whether the robotic arm could grasp how to compensate for its deformity. Dr. Lipson argues that such self-modeling machines could independently adapt to circumstances their creators never foresaw, and so become truly self-thinking entities. In his words, “If we want robots to become independent, to adapt quickly to scenarios unforeseen by their creators, then it’s essential that they learn to simulate themselves.” This groundbreaking discovery could chip away at the $3 billion robotic system integration market, since machines could go to work on day one and power up to meet any task by their own intuition. Yet Dr. Lipson sees an even bigger picture for his research: pushing artificial intelligence beyond today’s narrow applications toward General AI. Narrow AI is programmed for targeted use cases, such as today’s self-driving cars and industrial robots, and lacks the organism-like versatility that General AI would offer. “We conjecture that this advantage may have also been the evolutionary origin of self-awareness in humans. While our robot’s ability to imagine itself is still crude compared to humans, we believe that this ability is on the path to machine self-awareness,” says Dr. Lipson. Recognizing that General AI could open a Pandora’s box of ethical concerns, the team is proceeding cautiously: “Self-awareness will lead to more resilient and adaptive systems, but also implies some loss of control. It’s a powerful technology, but it should be handled with care.”

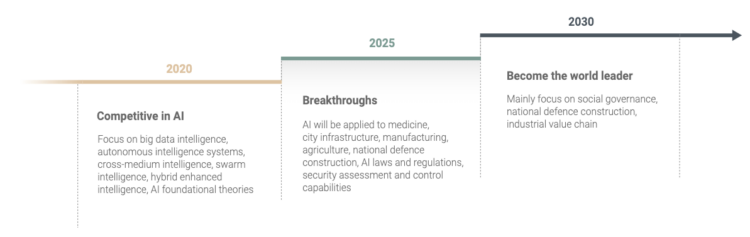

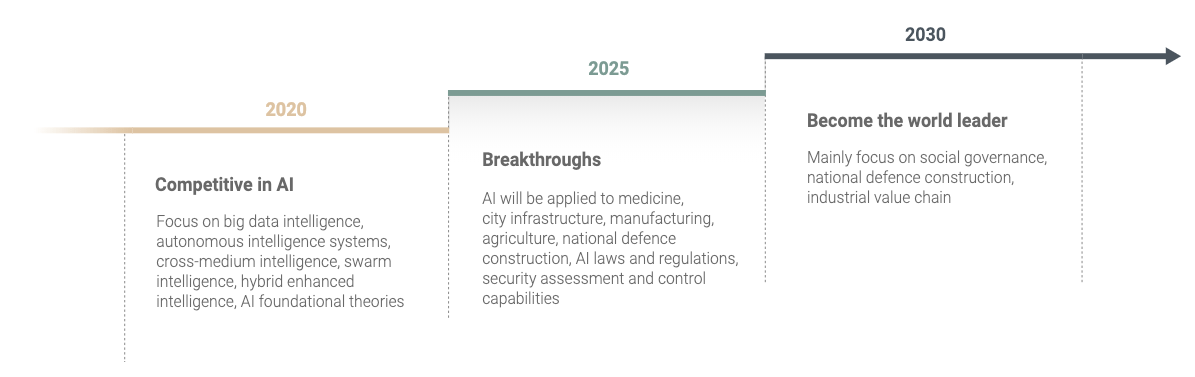

The chances of intelligent systems being put to nefarious use are rising with the surge of new entrants into the field over the past five years. The World Intellectual Property Organization (WIPO) states that roughly half of all AI-related patent applications, some 170,000, have been filed since 2013. According to the WIPO report, most of these applications come from the United States and China. “Chinese organizations make up 17 of the top 20 academic players in AI patenting, as well as 10 of the top 20 in AI-related scientific publications,” says WIPO Director General Francis Gurry. This push fits Beijing’s broader industrial strategy, including its “Made in China 2025” plan, and its stated goal of becoming the global AI leader by 2030. Chinese investors already account for close to half of all equity funding for AI and robotics startups. The downside of the Asian superpower’s ambitions is its open embrace of these technologies to spy on its own citizens.

According to a recent article in The New York Times, “China has become the world’s biggest market for security and surveillance technology, with analysts estimating the country will have almost 300 million cameras installed by 2020.” The Times reports that “Government contracts are fueling research and development into technologies that track faces, clothing and even a person’s gait. Experimental gadgets, like facial-recognition glasses, have begun to appear.” Such use cases have prompted Director General Gurry to stress the need for UN member states to develop a legal and ethical framework for AI, especially as General AI appears on the horizon. As Gurry puts it, “Is it [AI] good news or bad news? Well, I would tend to say that all technology is somewhat neutral, and it depends on what you do with it.”