Healthy humans take their five senses for granted. Molding metal into perceiving machines, by contrast, requires a significant number of engineers and a great deal of capital. Already, we have handed over many of our faculties to embedded devices in our cars, homes, workplaces, hospitals, and governments. Even automation skeptics unwillingly trust the smart gadgets in their pockets with their lives.

Last week, General Motors stepped up its autonomous car effort by augmenting its artificial intelligence unit, Cruise Automation, with greater perception capabilities through the acquisition of LIDAR (Light Imaging, Detection, And Ranging) technology company Strobe. GM purchased Cruise with great fanfare last year for a billion dollars. Strobe’s unique value proposition is shrinking its optical arrays to the size of a microchip, thereby substantially reducing the cost of a traditionally expensive sensor that autonomous vehicles rely on to measure the distances of objects on the road. Cruise CEO Kyle Vogt wrote last week on Medium that “Strobe’s new chip-scale LIDAR technology will significantly enhance the capabilities of our self-driving cars. But perhaps more importantly, by collapsing the entire sensor down to a single chip, we’ll reduce the cost of each LIDAR on our self-driving cars by 99%.”
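To make "measuring the distances of objects" concrete, here is a minimal sketch of pulsed time-of-flight ranging, one common LIDAR principle: time a light pulse's round trip and halve the path. This is an illustration only. Nothing here says which ranging method Strobe's chip uses, and the function names are hypothetical.

```python
# Speed of light in vacuum, in metres per second (exact by SI definition).
SPEED_OF_LIGHT_M_S = 299_792_458.0


def tof_distance_m(round_trip_s: float) -> float:
    """Distance to a target, given the round-trip time of a light pulse."""
    if round_trip_s < 0:
        raise ValueError("round-trip time must be non-negative")
    # The pulse travels out to the target and back, so halve the path length.
    return SPEED_OF_LIGHT_M_S * round_trip_s / 2.0


def round_trip_time_s(distance_m: float) -> float:
    """Inverse relation: how long a pulse takes to reach a target and return."""
    if distance_m < 0:
        raise ValueError("distance must be non-negative")
    return 2.0 * distance_m / SPEED_OF_LIGHT_M_S


if __name__ == "__main__":
    # An echo that arrives 200 nanoseconds after the pulse left.
    print(f"{tof_distance_m(200e-9):.2f} m")
```

The round trip for a car-length distance lasts only tens of nanoseconds, which is one reason the timing electronics in these sensors have to be so precise.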

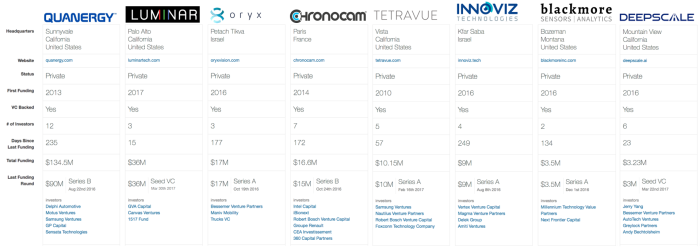

GM is not the first Detroit automaker aiming to reduce the costs of sensors on the road; last year Ford invested $150 million in Velodyne, the leading LIDAR company on the market. Velodyne is best known for its rotating sensor, which is often mistaken for a siren on top of the car. In describing the transaction, Raj Nair, Ford’s Executive Vice President, Product Development and Chief Technical Officer, said, “From the very beginning of our autonomous vehicle program, we saw LIDAR as a key enabler due to its sensing capabilities and how it complements radar and cameras. Ford has a long-standing relationship with Velodyne and our investment is a clear sign of our commitment to making autonomous vehicles available for consumers around the world.” As the race among competing perception technologies heats up, LIDAR is already a crowded field, with eight other companies (below) competing to become the standard vision system for autonomous driving.

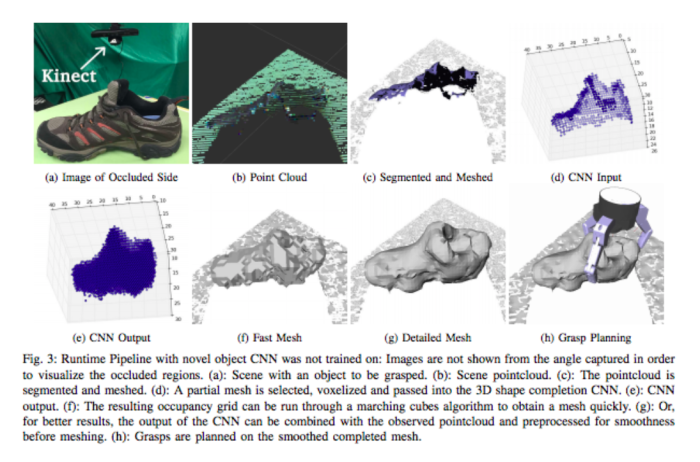

Walking the halls of Columbia University’s engineering school last week, I visited a number of the robotics labs working on the next generation of sensing technology. Dr. Peter Allen, Professor of Computer Science, is the founder of the Columbia Grasp Database, whimsically called GraspIt!, which enables robots to better recognize and pick up everyday objects. GraspIt! provides “an architecture to enable robotic grasp planning via shape completion.” The open source GraspIt! database has over 440,000 3D representations of household articles from varying viewpoints, which are used to train its “3D convolutional neural network (CNN).” According to the Lab’s IEEE paper published earlier this year, the CNN takes “a 2.5D pointcloud” captured from “a single point of view” of each item and then “fills in the occluded regions of the scene, allowing grasps to be planned and executed on the completed object” (see diagram below). As Dr. Allen demonstrated last week, the CNN performs as successfully in live scenarios, with a robot “seeing” an object for the first time, as it does in computer simulations.

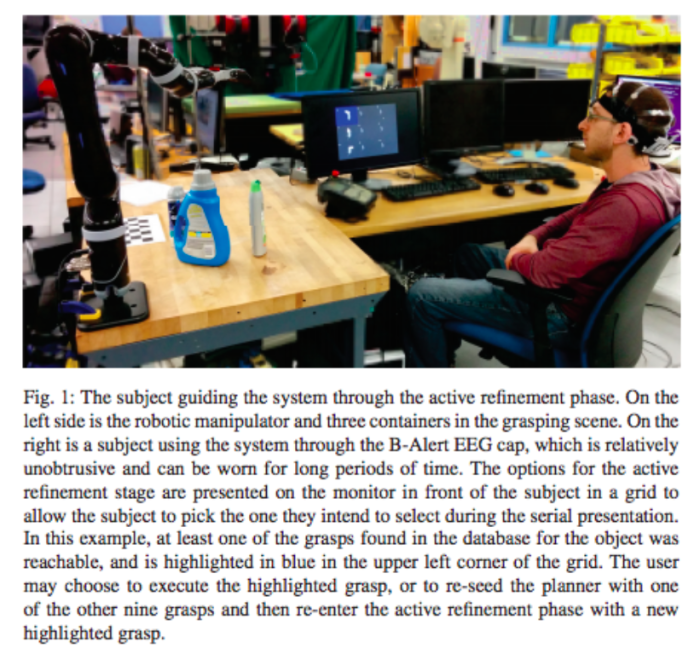
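A 3D CNN typically works on a regular grid rather than on loose points, so a single-view pointcloud is usually converted into occupied and empty cells (voxels) first. The sketch below illustrates that general preprocessing idea. It is not the lab's actual pipeline; the function name and the grid size are hypothetical choices.

```python
from typing import Sequence, Set, Tuple

Point = Tuple[float, float, float]
Voxel = Tuple[int, int, int]


def voxelize(points: Sequence[Point], grid_size: int = 8) -> Set[Voxel]:
    """Map 3D points to the set of occupied cells in a cubic grid.

    The grid is fitted to the bounding box of the points; grid_size is an
    illustrative default, not a value taken from the paper.
    """
    if not points:
        return set()
    mins = [min(p[a] for p in points) for a in range(3)]
    maxs = [max(p[a] for p in points) for a in range(3)]
    # Use one cell size on every axis so the object's shape is not distorted.
    extent = max(maxs[a] - mins[a] for a in range(3))
    cell = extent / grid_size if extent > 0 else 1.0
    occupied: Set[Voxel] = set()
    for p in points:
        # Clamp so points on the far face land in the last cell.
        i, j, k = (min(int((p[a] - mins[a]) / cell), grid_size - 1) for a in range(3))
        occupied.add((i, j, k))
    return occupied


if __name__ == "__main__":
    # Three points standing in for a depth-camera view of one side of an object.
    view = [(0.0, 0.0, 0.0), (0.5, 0.5, 0.5), (1.0, 1.0, 1.0)]
    print(sorted(voxelize(view, grid_size=4)))
```

A shape-completion network would then take a grid like this, which covers only the visible side, and predict which of the remaining cells the hidden side of the object occupies.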

Taking a novel approach to using their cloud-based data platform, Allen’s team now aims to help quadriplegics better navigate their world with assistive robots. Typically, a quadriplegic is reliant on human aides to perform even the most basic functions like eating and drinking; however, Brain-Computer Interfaces (BCI) offer the promise of independence with a robot. Wearing a BCI helmet, Dr. Allen’s grad student was able to move a robot around the room just by looking at objects on a screen. The object on the screen triggers electroencephalogram (EEG) waves that are transmitted to the robot, which translates the signals into pointcloud images in the database. According to their research, “Noninvasive BCI’s, which are very desirable from a medical and therapeutic perspective, are only able to deliver noisy, low-bandwidth signals, making their use in complex tasks difficult. To this end, we present a shared control online grasp planning framework using an advanced EEG-based interface…This online planning framework allows the user to direct the planner towards grasps that reflect their intent for using the grasped object by successively selecting grasps that approach the desired approach direction of the hand. The planner divides the grasping task into phases, and generates images that reflect the choices that the planner can make at each phase. The EEG interface is used to recognize the user’s preference among a set of options presented by the planner.”

While technologies like LIDAR and GraspIt! enable robots to better perceive the human world, in the basement of the SEAS Engineering Building at Columbia University, Dr. Matei Ciocarlie is developing an array of affordable tactile sensors for machines to touch & feel their environments. Humans have very complex multi-modal systems that are built through trial & error knowledge gained since birth. Dr. Ciocarlie ultimately aims to build a robotic gripper that has the capabilities of a human hand. Using light signals, Dr. Ciocarlie has demonstrated “sub-millimeter contact localization accuracy” on the object being grasped, which is used to determine the force applied in picking it up. At Columbia’s Robotic Manipulation and Mobility Lab (ROAM), Ciocarlie is tackling “one of the key challenges in robotic manipulation” by figuring out how “you reduce the complexity of the problem without losing versatility.” He demonstrated a variety of new grippers and force sensors, which are being deployed in environments as hostile as the International Space Station and a human’s cluttered home, but the most immediately promising innovation is Ciocarlie’s therapeutic robotic hand.

According to Ciocarlie’s paper: “Fully wearable hand rehabilitation and assistive devices could extend training and improve quality of life for patients affected by hand impairments. However, such devices must deliver meaningful manipulation capabilities in a small and lightweight package… In experiments with stroke survivors, we measured the force levels needed to overcome various levels of spasticity and open the hand for grasping using the first of these configurations, and qualitatively demonstrated the ability to execute fingertip grasps using the second. Our results support the feasibility of developing future wearable devices able to assist a range of manipulation tasks.”

Across the ocean, Dr. Hossam Haick of Technion-Israel Institute of Technology has built an intelligent olfactory system that can diagnose cancer. Dr. Haick explains, “My college roommate had leukemia, and it made me want to see whether a sensor could be used for treatment. But then I realized early diagnosis could be as important as treatment itself.” Patients breathe into a tube, where an array of sensors composed of “gold nanoparticles or carbon nanotube” detects cancer biomarkers through smell. “We send all the signals to a computer, and it will translate the odor into a signature that connects it to the disease we exposed to it,” says Dr. Haick. Last December, Haick’s AI reported an 86% accuracy in predicting cancers in more than 1,400 subjects in 17 countries. The accuracy increased with use of its neural network in specific disease cases. Haick’s machine could one day have better olfactory senses than canines, which have been proven to be able to sniff out cancer.

While writing this post on robotic senses, I had several conversations with Alexa, and I am always impressed with her auditory processing skills. It seems that the only area in which humans will exceed robots will be taste; however, I am reminded of Dr. Hod Lipson’s food printer. As I watched Lipson’s concept video of the machine squirting, layering, pasting and even cooking something that resembled Willy Wonka’s “Everlasting Gobstopper,” I sat back in his Creative Machines Lab realizing that Sci-Fi is no longer fiction.