In 1985, twenty-two-year-old Garry Kasparov became the youngest World Chess Champion. Twelve years later, he was defeated by a machine: IBM’s Deep Blue. That same year, RoboCup was formed to take on the world’s most popular game, soccer, with robots. Twenty years later, we are on the threshold of accomplishing what may be the biggest feat in machine intelligence: a team of fully autonomous humanoids beating the reigning FIFA World Cup champions.

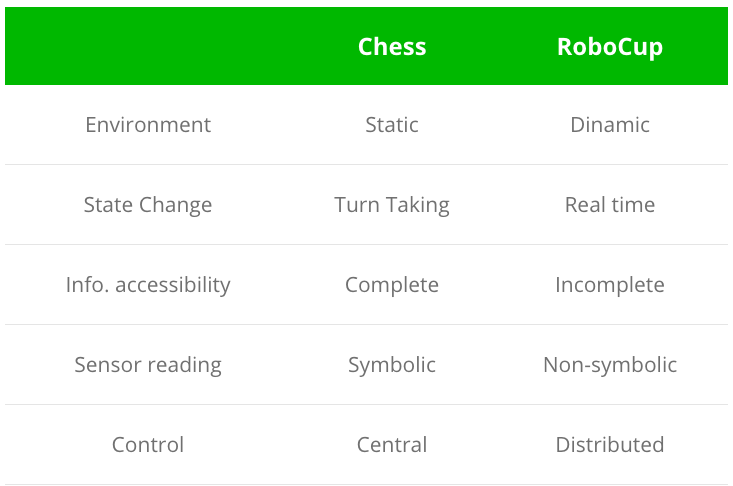

Many of the advances behind today’s wave of autonomous vehicles and machine intelligence are the result of decades of competitions. While Deep Blue and AlphaGo have beaten the world’s best players at board games, a robot that hopes to beat humans on the soccer field must cope with real-world complexities that board games lack (see chart). RoboCup teams must therefore combine many technologies within a single humanoid robot, including real-time sensor fusion, reactive behavior, strategy acquisition, deep learning, real-time planning, multi-agent systems, context recognition, vision, strategic decision-making, motor control, and intelligent robot control.

Professor Daniel Lee of the University of Pennsylvania’s GRASP Lab described the RoboCup challenge best: “Why is it that we have machines that can beat us in chess or Jeopardy but we can beat them in soccer? What makes it so difficult to embody intelligence into the physical world?” Lee explains, “It’s not just the soccer domain. It’s really thinking about artificial intelligence, robotics, and what they can do in a more general context.”

RoboCup has since grown beyond soccer into new leagues focused on commercially relevant applications, from social robotics to search & rescue to industrial automation. These leagues have a number of subcategories of competition with varying degrees of difficulty. In less than two months, international teams will convene in Nagoya, Japan, for the twenty-first games. As a preview of what to expect, let’s review some of last year’s winners; just maybe, they will give us a peek at the future of automation.

RoboCup Soccer

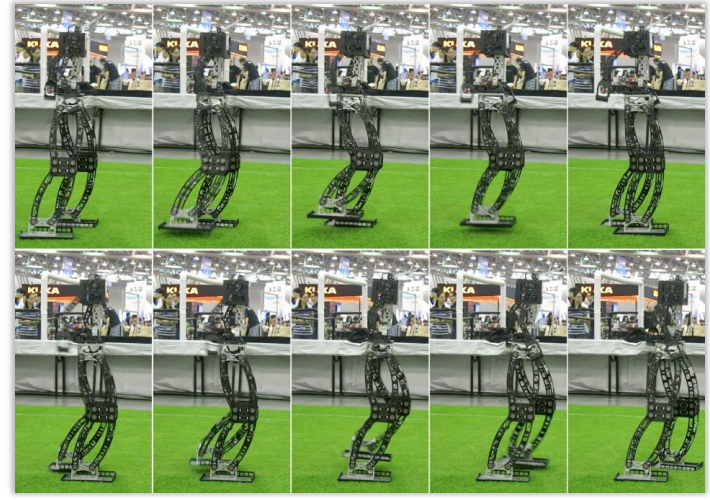

While Iran’s human soccer team is ranked 28th in the world, its robot counterpart, Baset Pazhuh Tehran, won first place in the AdultSize Humanoid competition. Baset’s secret sauce lies in its proprietary algorithms for motion control, perception, and path planning. According to Baset’s team description paper, the key was building “a fast and stable walk engine” based upon the success of past competitions. This walk engine was able to combine “all actuators’ data in each joint, and changing the inverse and forward kinematics” to “avoid external forces affecting robot’s stability, this feature plays an important role to keep the robot standing when colliding to the other robots or obstacles.” Another big factor was the team’s goalkeeper, which used a stereo vision sensor to detect incoming plays and, in the paper’s words, to have “better percepts of goal poles, obstacles, and the opponent’s goal keeper. To locate each object in real self-coordinating system.” The team is part of a larger Iranian corporation, Baset, which could deploy this perception technology in the field. Baset’s oil and gas clients could benefit from better localization and object recognition for pipeline inspections and autonomous work vehicles. If RoboCup’s humanoids are capable of playing humans at soccer by 2050, one has to wonder whether Baset’s mechanical players will spend their off-season working in the Arabian Peninsula.
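
The quoted paper does not detail Baset’s stereo pipeline, but every rectified stereo camera relies on the same principle: a feature that appears d pixels apart in the left and right images lies at depth Z = f·B/d, where f is the focal length in pixels and B is the distance between the two cameras. The minimal Python sketch below assumes an already calibrated and rectified camera pair. The `StereoRig` class, the `triangulate` function, and all numbers are hypothetical illustrations, not Baset’s code.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class StereoRig:
    """Hypothetical calibration of a rectified stereo pair (left camera is the reference)."""
    focal_px: float    # focal length, in pixels
    baseline_m: float  # distance between the two camera centres, in metres
    cx: float          # principal point x, in pixels
    cy: float          # principal point y, in pixels


def triangulate(rig: StereoRig, u_left: float, v_left: float,
                u_right: float) -> tuple[float, float, float]:
    """Return (X, Y, Z) in metres, in the left camera frame, for one matched pixel pair.

    In a rectified pair a feature lies on the same image row in both views,
    so only the horizontal offset (the disparity) carries depth information.
    """
    disparity = u_left - u_right
    if disparity <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    z = rig.focal_px * rig.baseline_m / disparity  # depth: Z = f * B / d
    x = (u_left - rig.cx) * z / rig.focal_px       # back-project with the pinhole model
    y = (v_left - rig.cy) * z / rig.focal_px
    return x, y, z


if __name__ == "__main__":
    rig = StereoRig(focal_px=700.0, baseline_m=0.12, cx=320.0, cy=240.0)
    # A ball detected at column 400 in the left image and column 372 in the right.
    print(triangulate(rig, u_left=400.0, v_left=260.0, u_right=372.0))
```

With a 28-pixel disparity this hypothetical rig places the ball about 3 m away. Halving the disparity doubles the estimated distance, which is why stereo depth estimates become less precise for distant objects.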

RoboCup Rescue FAC

In 2001, RoboCup added a rescue competition, paving the way for many life-saving innovations that first responders are already embracing. The course simulates an earthquake-stricken environment in which the robot performs a 20-minute search-and-rescue mission. Robots are graded on a series of obstacles designed to assess autonomous operation, mobility, and object manipulation. Points are awarded for the number of victims found, the details the robot reports about each victim, and the quality of its map of the area. In 2016, students from King Mongkut’s University of Technology North Bangkok won first place with their Invigorating Robot Activity Project (iRAP).

Like Baset, iRAP built its success on solving problems from previous contests, in which the team consistently placed in the top tier. The team fielded four robots: one autonomous robot, two teleoperated robots, and one aerial drone. Each robot carried multiple sensors providing critical data such as CO2 levels, temperature, position, 2D maps, images, and two-way communication. iRAP’s robots navigated the test environment’s rough surfaces, hard terrain, rolling floors, stairs, and inclines with remarkable ease. The most impressive performer was the caged quadcopter, which used enhanced sensors to localize itself within an outdoor search perimeter. According to the team’s description paper, “we have developed the autonomously outdoor robot that is the aerial robot. It can fly and localize itself by GPS sensor. Besides, the essential sensors for searching the victim.” Interestingly, the Thai team’s design was remarkably similar to Flyability’s Gimball, which won first place in the UAE’s 2015 Drones for Good competition. Like the RoboCup winner, the Gimball was designed specifically for search & rescue missions and is protected by a lightweight carbon fiber cage. As RoboCup contestants push the envelope of navigation and mapping technologies, the 2017 fleet may well include subterranean devices that can find victims within minutes of their being buried.
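
iRAP’s paper says only that the drone localizes itself with a GPS sensor. One common way to turn GPS fixes into positions a robot can plan with is to convert latitude and longitude into metres east and north of a home point. Over a search area a few hundred metres across, a simple equirectangular approximation is usually accurate enough. The Python sketch below assumes a spherical Earth and a rectangular perimeter centred on the take-off point. The function names and coordinates are hypothetical and are not taken from iRAP.

```python
from __future__ import annotations

import math

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; a spherical-Earth assumption


def gps_to_local(lat_deg: float, lon_deg: float,
                 home_lat_deg: float, home_lon_deg: float) -> tuple[float, float]:
    """Approximate (east, north) offset in metres of a GPS fix from a home fix.

    Equirectangular approximation: adequate over short distances,
    poor over long ones or near the poles.
    """
    d_lat = math.radians(lat_deg - home_lat_deg)
    d_lon = math.radians(lon_deg - home_lon_deg)
    mean_lat = math.radians((lat_deg + home_lat_deg) / 2.0)
    east = EARTH_RADIUS_M * d_lon * math.cos(mean_lat)  # meridians converge with latitude
    north = EARTH_RADIUS_M * d_lat
    return east, north


def inside_perimeter(east: float, north: float,
                     half_width_m: float, half_length_m: float) -> bool:
    """True if a local position lies within a rectangle centred on the home point."""
    return abs(east) <= half_width_m and abs(north) <= half_length_m


if __name__ == "__main__":
    home = (13.8200, 100.5140)  # hypothetical take-off point
    fix = (13.8203, 100.5142)   # hypothetical later GPS reading
    east, north = gps_to_local(*fix, *home)
    status = "inside" if inside_perimeter(east, north, 50.0, 50.0) else "outside"
    print(f"east={east:.1f} m, north={north:.1f} m, {status}")
```

Working in local metres rather than raw degrees lets the same coordinates feed both the perimeter check and a 2D victim map. A real drone would also filter noisy fixes, which this sketch leaves out.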

RoboCup @Home

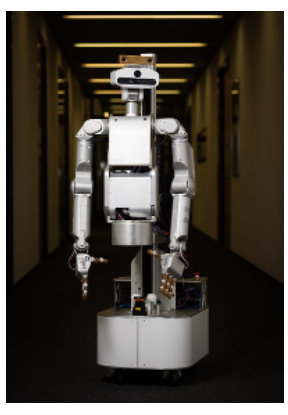

The home, like the soccer field, is one of the most chaotic environments in which robots must operate. It is also one of the biggest areas of interest for consumers. Last year, RoboCup @Home celebrated its 10th anniversary by awarding its top prize to Team-Bielefeld (ToBI) of Germany. ToBI built a humanoid-like robot capable of learning new skills through natural language in unknown environments. According to the team’s paper, “the challenge is two-fold. On the one hand, we need to understand the communicative cues of humans and how they interpret robotic behavior. On the other hand, we need to provide technology that is able to perceive the environment, detect and recognize humans, navigate in changing environments, localize and manipulate objects, initiate and understand a spoken dialog and analyse the different scenes to gain a better understanding of the surrounding.” To achieve these ambitious objectives, the team created a Cognitive Interaction Toolkit (CITK) to support an “aggregation of required system artifacts, an automated software build and deployment, as well as an automated testing environment.” Running the team’s proprietary software, its primary robot, the Meka M1 Mobile Manipulator (pictured), demonstrated the latest developments in human-robot interaction in a domestic setting. The team showed the Meka opening a closed door, navigating safely around a person blocking its way, and recognizing and grasping many household objects.

According to the team, “the robot skills proved to be very effective for designing determined tasks, including more script-like tasks, e.g. ’Follow-Me’ or ’Who-is-Who’, as well as more flexible tasks including planning and dialog aspects, e.g. ’General-PurposeService-Robot’ or ’Open-Challenge’.”

RoboCup @Work

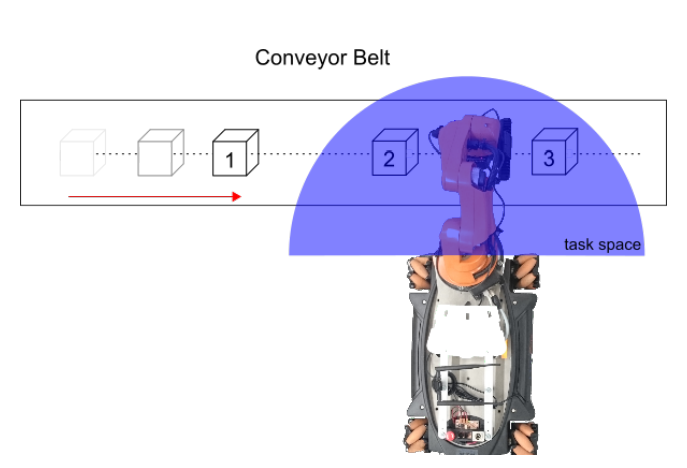

At the 2016 @Work competition, the Autonohm team from the University of Nürnberg won first place. While Autonohm’s hardware was mostly off-the-shelf (a KUKA youBot mobile robot), its software relied on a number of proprietary algorithms. According to the paper, “in the RoboCup we use this software e.g. to grab objects using inverse kinematics, to optimize trajectories and to create fast and smooth movements with the manipulator. Besides the usability the main improvements are the graph based planning approach and the higher control frequency of the base and the manipulator.” The key to using this approach in a factory setting is robust object recognition. The paper explains how the robot grasps a moving object: “the robot measures the speed and position of the object. It calculates the point and time where the object reaches the task place. The arm moves above the calculated point. Waits for the object and accelerates until the arm is directly above the moving-object with the same speed. Overlapping the down movement with the current speed until gripping the object. The advantage of this approach is that while the calculated position and speed are correct every orientation and much higher objects can be gripped.”
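
The moving-object grasp quoted above can be expressed with a little kinematics. The minimal Python sketch below assumes a one-dimensional conveyor, an object moving at a constant measured speed v, and an arm that waits at rest above the calculated point and then accelerates at a constant rate a. Under those assumptions the arm reaches speed v after v/a seconds, covering v²/(2a) while the object covers v²/a. The arm must therefore start moving when the object is v²/(2a) short of the waiting point. The `Track`, `InterceptPlan`, and `plan_intercept` names are hypothetical. This illustrates the quoted steps and is not Autonohm’s implementation.

```python
from dataclasses import dataclass


@dataclass
class Track:
    """Hypothetical constant-velocity estimate of an object on a conveyor."""
    x0: float  # measured position along the conveyor at time t0 (m)
    v: float   # measured speed (m/s), assumed constant and positive
    t0: float  # time of the measurement (s)

    def position(self, t: float) -> float:
        return self.x0 + self.v * (t - self.t0)

    def time_at(self, x: float) -> float:
        return self.t0 + (x - self.x0) / self.v


@dataclass
class InterceptPlan:
    t_start_accel: float  # when the arm leaves its waiting point
    t_matched: float      # when arm and object share position and speed
    x_matched: float      # where along the conveyor that happens


def plan_intercept(track: Track, x_wait: float, accel: float) -> InterceptPlan:
    """Arm waits at rest above x_wait, then accelerates at `accel` so that it
    reaches the object's speed exactly when it is directly above the object."""
    if track.v <= 0 or accel <= 0:
        raise ValueError("speed and acceleration must be positive")
    ramp_time = track.v / accel                # time for the arm to reach speed v
    arm_travel = 0.5 * accel * ramp_time ** 2  # v^2 / (2a)
    obj_travel = track.v * ramp_time           # v^2 / a
    # The object must be (obj_travel - arm_travel) behind x_wait when the arm starts.
    x_obj_at_start = x_wait - (obj_travel - arm_travel)
    t_start = track.time_at(x_obj_at_start)
    if t_start < track.t0:
        raise ValueError("object is already too close; choose a later waiting point")
    return InterceptPlan(t_start, t_start + ramp_time, x_wait + arm_travel)


if __name__ == "__main__":
    track = Track(x0=0.0, v=0.1, t0=0.0)  # object seen at 0 m, moving at 0.1 m/s
    print(plan_intercept(track, x_wait=0.5, accel=0.2))
```

From the matched instant, the arm would keep moving at v while lowering the gripper, which corresponds to the quoted “overlapping the down movement” step; the vertical motion is omitted here for brevity. Because the arm and object move together during the descent, the grasp tolerates timing errors better than trying to close the gripper at a single instant.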

As with other finalists, Autonohm’s object recognition software was the determining factor in its success. RoboCup’s goal of playing World Cup soccer with robots may seem frivolous, but the pursuit is anything but meaningless. In each league, the advances made in competition are paying real dividends across many industries worldwide.

In the words of the RoboCup mission statement: “The ultimate goal is to ‘develop a robot soccer team which beats the human world champion team.’ (A more modest goal is ‘to develop a robot soccer team which plays like human players.’) Needless to say, the accomplishment of the ultimate goal will take decades of effort. It is not feasible with current technologies to accomplish this in the near future. However, this goal can easily lead to a series of well-directed subgoals. Such an approach is common in any ambitious, or overly ambitious project. In the case of the American space program, the Mercury project and the Gemini project, which manned an orbital mission, were two precursors to the Apollo mission. The first subgoal to be accomplished in RoboCup is ‘to build real and software robot soccer teams which play reasonably well with modified rules.’ Even to accomplish this goal will undoubtedly generate technologies, which will impact a broad range of industries.”