Recently, I attended an “Artificial Intelligence (AI) Roundtable” of leading scientists, entrepreneurs and venture investors. As the discussion focused mainly on basic statistical techniques, I left unfulfilled. My friend Matt Turck recently wrote that “just about every major tech company is working very actively on AI,” which also means that every startup hungry for capital is purchasing a “.ai” domain name. As the lines blur between what is AI and what really isn’t, I feel it necessary to give readers a quick lens for viewing intelligent agents for mechatronics.

For more than six decades, the Turing Test remained unbeaten until a computer program called “Eugene Goostman” conquered it in 2014. The chatbot, which simulates a 13-year-old Ukrainian boy, did the unthinkable, fooling a group of human judges into thinking it was more real than a live person on the other side of the screen. Alan Turing’s original purpose in devising the test was to examine the question, “Can machines think?”

According to the competition’s organizer, Kevin Warwick of Coventry University, “The words Turing test have been applied to similar competitions around the world. However, this event involved the most simultaneous comparison tests than ever before, was independently verified and, crucially, the conversations were unrestricted. We are therefore proud to declare that Alan Turing’s test was passed for the first time.” Since then, many have debated whether the threshold was indeed crossed then, or before, or ever…

Last December, the Turing Test’s sound barrier (of sorts) was broken by a group of researchers from MIT’s Computer Science and Artificial Intelligence Lab (CSAIL). Using a deep-learning algorithm, the AI generated sounds for silent video clips that human viewers could not tell apart from the real recordings. As a silent video clip played, the computer program produced sound that was realistic enough to fool even the most hardened audiophiles. According to the research paper, the authors envision their algorithms being used in the future to automatically produce sound effects for movies and TV shows, as well as to help robots better understand their environments.

Lead author Andrew Owens said: “being able to predict sound is an important first step toward being able to predict the consequences of physical interactions with the world. A robot could look at a sidewalk and instinctively know that the cement is hard and the grass is soft, and therefore know what would happen if they stepped on either of them.”

Unpacking Owens’ statement, one uncovers a good deal of artificial intelligence theory. The algorithm uses a deep learning approach to automatically train the computer to match sounds to pictures through experience. According to the paper, the researchers spent several months recording close to 1,000 videos of approximately 46,000 sounds, each representing unique objects being “hit, scraped and prodded with a drumstick.”

Typically, artificial intelligence starts with creating an agent to solve a specific problem. Often agents are symbolic or logical, but deep learning approaches like the MIT example use sub-symbolic neural networks that attempt to emulate how the human brain learns. Autonomous devices, like robots, use machine learning approaches to combine algorithms with experience.

AI is very complex and relies on theories derived from a multitude of disciplines, including computer science, mathematics, psychology, linguistics, philosophy, neuroscience and statistics. For the purposes of this article, it may be best to group modern approaches into two categories: “supervised” and “unsupervised” learning. Supervised learning trains on examples that come with known target values, either class labels (much as nominal variables label values in statistics) or continuous values, and uses them to predict outcomes for new data, for example through classification or numerical regression. In unsupervised learning there is no notion of a target value. Rather, algorithms group the data into clusters and look for structure in the inputs themselves. The primary difference between the two approaches is that in unsupervised learning the computer program has to find and label patterns in streams of inputs automatically, without being given the answers. In the above example, the algorithm connects the silent video images to the library of drumstick sounds.
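To make the distinction concrete, here is a minimal sketch in Python. It is not taken from the MIT paper: the one-dimensional “shininess” readings, the labels and every function name are assumptions made up for illustration. The supervised half learns one average per label from labeled examples, while the unsupervised half runs a plain k-means loop over the same readings with the labels removed.

```python
# Toy 1-D "shininess" readings; all numbers and names are invented for illustration.

def train_supervised(samples):
    """Supervised: every sample carries a target label; learn one mean per label."""
    sums, counts = {}, {}
    for value, label in samples:
        sums[label] = sums.get(label, 0.0) + value
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def predict(centroids, value):
    """Return the label whose learned mean is closest to the new reading."""
    return min(centroids, key=lambda label: abs(centroids[label] - value))


def cluster_unsupervised(values, k=2, iterations=10):
    """Unsupervised: no labels; plain k-means groups the readings into k clusters."""
    ordered = sorted(values)
    # Seed the centers with spaced-out readings so the result is deterministic.
    centers = ordered[:: max(1, len(ordered) // k)][:k]
    groups = []
    for _ in range(iterations):
        groups = [[] for _ in centers]
        for v in values:
            nearest = min(range(len(centers)), key=lambda i: abs(centers[i] - v))
            groups[nearest].append(v)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [sum(g) / len(g) if g else centers[i] for i, g in enumerate(groups)]
    return centers, groups


labeled = [(0.9, "silver"), (0.8, "silver"), (0.2, "wood"), (0.1, "wood")]
model = train_supervised(labeled)
print(predict(model, 0.75))  # prints "silver"

centers, groups = cluster_unsupervised([0.9, 0.8, 0.2, 0.1])
print(centers, groups)
```

Note that the clustering half recovers two groups but has no idea that one of them is called “silver”; attaching a meaningful name to each cluster is a separate step.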

Now that the connections are made between patterns and inputs, the next step is to control behavior. The most rudimentary AI applications are divided between classifiers (“if shiny then silver”) and controllers (“if shiny then pick up”). It is important to note that controllers also classify conditions before performing actions. Classifiers use pattern matching to determine the closest match. In supervised learning, each pattern belongs to a certain predefined class. A data set consists of the class labels together with the observations gathered through experience. The more experience, the more classes there are and the more data there is to input.
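The two rules quoted above can be written out as a short Python sketch. The `Observation` type, the `shininess` reading and the 0.7 threshold are hypothetical, chosen only to show that the controller calls the classifier before it decides on an action.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    shininess: float  # hypothetical sensor reading between 0 and 1


SHINY_THRESHOLD = 0.7  # assumed cut-off, picked only for illustration


def classify(obs: Observation) -> str:
    """Classifier: maps an observation to a class ("if shiny then silver")."""
    return "silver" if obs.shininess >= SHINY_THRESHOLD else "not silver"


def control(obs: Observation) -> str:
    """Controller: classifies first, then chooses an action ("if shiny then pick up")."""
    label = classify(obs)
    return "pick up" if label == "silver" else "ignore"


for reading in (0.9, 0.3):
    obs = Observation(shininess=reading)
    print(reading, classify(obs), control(obs))
```

In a learned system the hand-written threshold would be replaced by a trained model, but the division of labor stays the same: the classifier names what it sees, and the controller turns that name into an action.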

In robotics, unsupervised (machine) learning is required for object manipulation, navigation, localization, mapping and motion planning, all of which become more challenging in unstructured environments such as enhanced manufacturing and autonomous driving. As a result, deep learning has driven progress in many sub-specialties of AI, such as computer vision, speech recognition and natural language processing. In 1986, Rodney Brooks proposed a new theory of AI that led to the greatest advancement in machine intelligence for robotics. Brooks’s “Subsumption Architecture” created a paradigm for real-time behavior via live interaction through sensor inputs.

In his own words, Brooks said in a 2015 interview, “The work I did in the 80s on what I called subsumption architecture then led directly to the iRobot Roomba. And there are 14 million of them deployed worldwide. And it was also used in the iRobot PackBot, and there were 4,500 PackBots in Iraq and Afghanistan remediating roadside bombs – and by the way, there are some PackBots and Warriors from iRobot inside Fukushima now, using the subsumption architecture. There’s a variation of subsumption inside Baxter – we call it behaviour-based now, but it’s a descendant of that subsumption architecture. And that’s what lets Baxter be aware of different things in parallel, for instance it’s picked something up, it’s put it in a box, something goes wrong, and it drops the object, sadly. A traditional robot would just continue and sort of mime putting the thing in the box, but Baxter is aware of that, changes behaviour – that’s using the behaviour-based approach, which is a variation on subsumption. So it is part of Baxter’s intelligence.”
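The behaviour Brooks describes can be illustrated with a much-simplified sketch. This is not Brooks’s original design, which wires together layers of finite state machines, nor Baxter’s actual software; it is a plain priority arbiter in the behaviour-based spirit, and the sensor flags, behaviour names and commands are all hypothetical. Each behaviour looks at the current sensor snapshot, and the first one that fires suppresses everything below it.

```python
from typing import Callable, Dict, List, Optional

Sensors = Dict[str, bool]  # hypothetical sensor flags
Behavior = Callable[[Sensors], Optional[str]]


def recover_from_drop(s: Sensors) -> Optional[str]:
    # Fires when the robot believes it is carrying something but the gripper is empty.
    if s.get("carrying") and not s.get("gripper_loaded"):
        return "stop and re-grasp"
    return None


def place_in_box(s: Sensors) -> Optional[str]:
    # Fires while the object is still in the gripper.
    if s.get("carrying") and s.get("gripper_loaded"):
        return "move to box and release"
    return None


def pick_up(s: Sensors) -> Optional[str]:
    # Fires when an object is in view and nothing is being carried.
    if s.get("object_visible") and not s.get("carrying"):
        return "pick up object"
    return None


def idle(s: Sensors) -> Optional[str]:
    # Lowest-priority default: always has something to say.
    return "wait"


# Ordered from highest to lowest priority.
LAYERS: List[Behavior] = [recover_from_drop, place_in_box, pick_up, idle]


def arbitrate(sensors: Sensors, layers: List[Behavior] = LAYERS) -> str:
    """Consult behaviours in priority order; the first command issued wins."""
    for behavior in layers:
        command = behavior(sensors)
        if command is not None:
            return command
    return "wait"


print(arbitrate({"carrying": True, "gripper_loaded": True}))
print(arbitrate({"carrying": True, "gripper_loaded": False}))
```

A scripted robot would run “pick up, move, release” as a fixed sequence and mime the last step after a drop. Here the drop changes the sensor snapshot, so a different behaviour takes over on the very next cycle.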

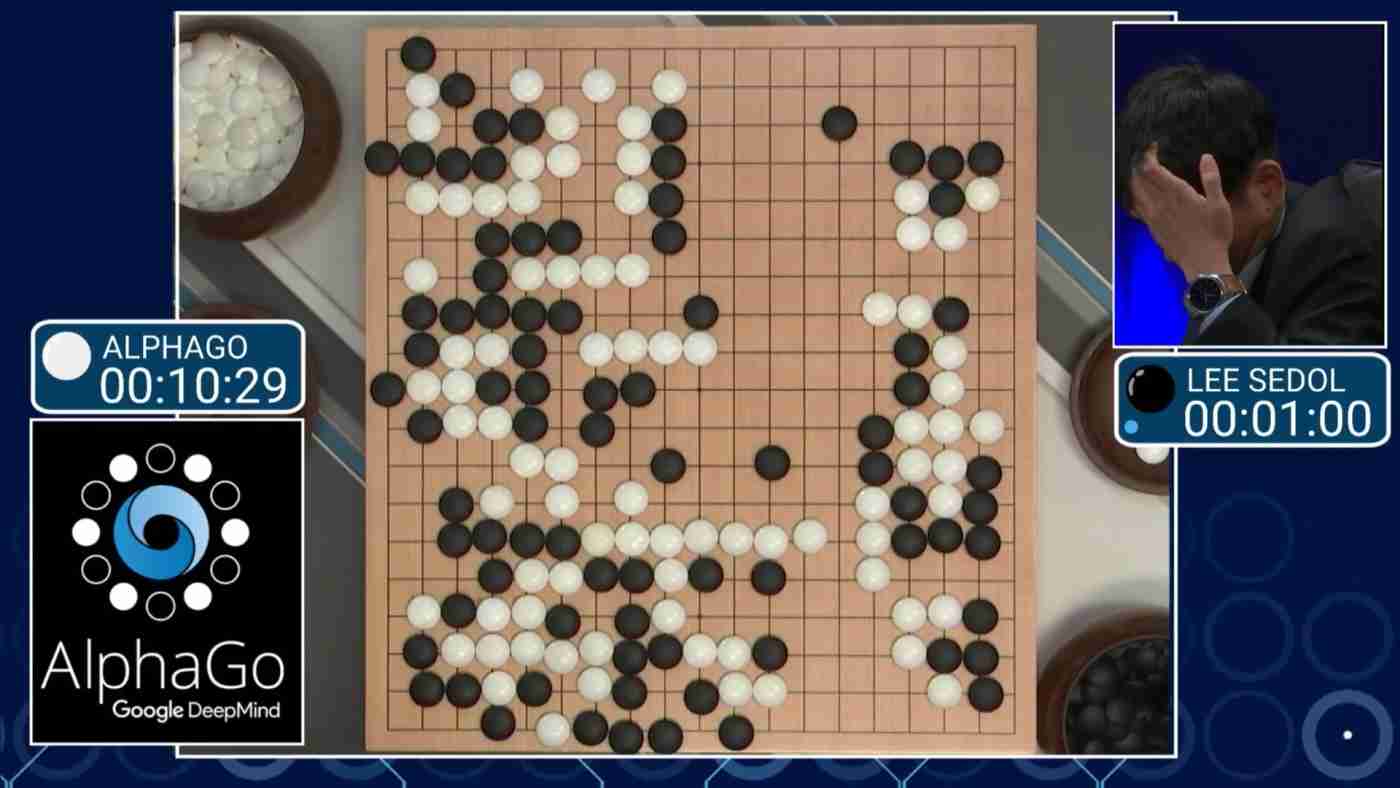

Many next-generation computer scientists are focusing on designing not just software that acts like the brain, but hardware that is built like one. Today, well-known deep learning applications, like Siri and Google Translate, run on traditional computing platforms that consume a lot of energy because logic and memory are separated. Last year, Google’s AlphaGo, the most successful deep learning program to date, was only able to beat the world-champion human player in Go after being trained on a database of thirty million moves while running on approximately one million watts of power.

When I asked Dr. Devanand Shenoy, formerly with the U.S. Department of Energy, about Google’s project, he said: “AlphaGo had to be retrained for every new game (a feature of narrow AI where the machine does one thing very well, even better than humans). Once learning and training algorithms are implemented on neuromorphic hardware that are distributed, asynchronous, perhaps event driven, and fault-tolerant, the ability to process data with many orders of magnitude improvements in energy-efficiency as well as superhuman speed across multiple applications could be possible in the future. Recent trends in transfer learning with reservoir computing as an example, suggest that artificial network configurations may be trained to learn more than one application.”

Superhuman intelligence could sound super scary; however, I am sobered by the predictions made in the 1960s by one of the founders of artificial intelligence, Professor Herbert Simon, who stated then: “machines will be capable, within twenty years, of doing any work a man can do.” As this article only scratches the surface of artificial intelligence, the subject will be further explored on June 13th in New York City at RobotLabNYC’s next event with Dr. Howard Morgan (First Round Capital) and Tom Ryden (MassRobotics). There are limited seats available, so be sure to RSVP today.