One of the most interesting discussions from this past week at RoboUniverse was Dr. Florian Röhrbein’s talk about his work in neurorobotics as part of the Human Brain Project (see prototype below). Röhrbein has created a suite of robots including iCub, Roboy, and a robotic mouse that maps the synapses of the rodent’s brain. The Human Brain Project, a new initiative launched by the EU, promises to deliver breakthroughs in information technology over the next 10 years by understanding how we humans compute in such an energy-efficient manner.

Artificial intelligence systems based on neural networks have had quite a string of recent successes: One beat human masters at the game of Go, another made up beer reviews, and another made psychedelic art. But taking these supremely complex and power-hungry systems out into the real world and installing them in portable devices is no easy feat.

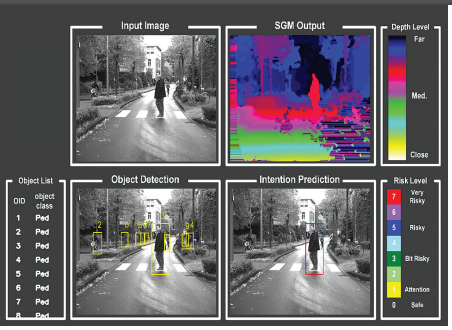

At a recent IEEE conference, researchers presented a new set of low-power chip prototypes designed to run artificial neural networks that could, among other things, give smartphones a bit of a clue about what they are seeing and allow self-driving cars to predict pedestrians’ movements. Until now, neural networks (learning systems that operate analogously to networks of connected brain cells) have been much too energy-intensive to run on the mobile devices that would most benefit from artificial intelligence, like smartphones, small robots, and drones. The mobile AI chips could also improve the intelligence of self-driving cars without draining their batteries or compromising their fuel economy. Don’t forget, our brain runs on about as much power as a 20-watt light bulb, which is remarkably efficient for the work it does compared with your iPhone.
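
To put the light-bulb comparison in numbers, here is a small back-of-the-envelope sketch. It assumes the brain draws a constant 20 watts all day, which is a simplification, and the function names are just for illustration.

```python
# Back-of-the-envelope: energy used in one day by a constant 20-watt load.
BRAIN_POWER_WATTS = 20.0   # figure quoted in the article
HOURS_PER_DAY = 24
SECONDS_PER_HOUR = 3600


def daily_energy_wh(power_watts: float) -> float:
    """Energy in watt-hours drawn over 24 hours at constant power."""
    return power_watts * HOURS_PER_DAY


def daily_energy_joules(power_watts: float) -> float:
    """Same quantity in joules (1 watt = 1 joule per second)."""
    return power_watts * HOURS_PER_DAY * SECONDS_PER_HOUR


print(daily_energy_wh(BRAIN_POWER_WATTS))      # 480.0
print(daily_energy_joules(BRAIN_POWER_WATTS))  # 1728000.0
```

In other words, a 20-watt budget works out to about 0.48 kilowatt-hours per day.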

These chips are still in the prototype stage, but breakthroughs in power consumption and brainwave connections could offer the greatest promise to our most vulnerable citizens: the disabled. Earlier this week, a paralyzed man used a chip implant in his brain to make his thoughts move his arm, marking an advance in a decades-long effort to restore movement to people with spinal-cord injuries. Ian Burkhart (below) became a quadriplegic five years ago after a diving accident. Messages from his brain to move his limbs can’t get to other parts of the body due to damage to his spinal cord.

Mr. Burkhart sits in front of a computer that shows a virtual hand demonstrating a movement. He then imagines making the movement. The brain signals are transmitted and decoded, and then electrical stimulation is delivered to the muscles using a sleeve embedded with electrodes that wraps around his arm.
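
The decode-then-stimulate loop described above can be pictured with a toy sketch. This is not the researchers’ algorithm: it swaps in a simple nearest-template classifier, and the feature vectors, movement names, and electrode numbers are all made up for illustration.

```python
import math

# Hypothetical templates: the average feature vector recorded while the
# participant imagined each movement. All values are invented.
TEMPLATES = {
    "rest": [0.1, 0.1, 0.1],
    "hand_open": [0.9, 0.2, 0.1],
    "hand_close": [0.2, 0.8, 0.3],
}

# Hypothetical mapping from a decoded movement to the sleeve electrodes
# that would be switched on to produce it.
STIM_PATTERNS = {
    "rest": [],
    "hand_open": [0, 3, 5],
    "hand_close": [1, 2, 4],
}


def decode(features):
    """Return the movement whose template is closest (Euclidean distance)."""
    return min(TEMPLATES, key=lambda name: math.dist(features, TEMPLATES[name]))


def electrodes_for(features):
    """Decode the intended movement, then look up its stimulation pattern."""
    return STIM_PATTERNS[decode(features)]


print(decode([0.85, 0.25, 0.1]))          # hand_open
print(electrodes_for([0.25, 0.75, 0.3]))  # [1, 2, 4]
```

A real system would work with far richer signals and would have to do this within milliseconds, but the two stages, decoding intent and then driving the electrodes, follow the same outline.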

In an experiment, Mr. Burkhart performed routine tasks that involve very complex hand and finger movements, including grasping a bottle, pouring its contents into a jar, and picking up a stick and stirring the contents.

“The uniqueness is, for the first time, we link brain signals in a high-fidelity and reproducible fashion within milliseconds to an individual who can move his own hands,” said Chad E. Bouton, one of the authors of the Nature paper describing the work and vice president of advanced engineering and technology at the Feinstein Institute for Medical Research in Manhasset, N.Y., who was previously at Battelle.

The work comes at a time when the field of “brain-computer interfaces” is receiving an infusion of federal money and a push to create applications that aren’t just interesting research projects but could eventually have practical uses in patients.

Last year, researchers led by a team at the University of California, Los Angeles published a study showing five men with paralysis making step-like movements through electrical stimulation to the spinal cord. And BrainGate, a multi-institutional project that is developing and testing its own neural-implant system, has shown patients able to control a keyboard and move a robotic arm.

Ali Rezai, director of Ohio State’s Center for Neuromodulation and one of the Nature paper’s authors, said the system currently can be used only in the lab.

Mr. Burkhart has a transmitter on his head that has to be plugged in to work. Brain signals change depending on everything from the temperature in the room to what someone is focusing on, and the algorithm that decodes those signals has to adjust in real time. The ability of the electrodes to transmit clear signals can erode over time. Meantime, Mr. Burkhart eventually may need to have the brain implant removed, due in part to concern about infection.
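
One simple way to picture a decoder that adjusts in real time is a baseline that follows slow drift in the signal. The sketch below uses an exponential moving average for this. It is an illustrative stand-in rather than the method used in the study, and the class name and `alpha` value are assumptions.

```python
class AdaptiveBaseline:
    """Tracks a slowly drifting baseline with an exponential moving average."""

    def __init__(self, alpha: float = 0.1, initial: float = 0.0):
        self.alpha = alpha        # adaptation rate (hypothetical value)
        self.baseline = initial   # current estimate of the drifting baseline

    def update(self, sample: float) -> float:
        """Fold in a new sample and return the baseline-corrected value."""
        self.baseline += self.alpha * (sample - self.baseline)
        return sample - self.baseline


tracker = AdaptiveBaseline(alpha=0.5)
print(tracker.update(1.0))  # 0.5
print(tracker.update(1.0))  # 0.25
```

When the raw signal settles at a new level, the corrected value shrinks toward zero, so slow drift (a warmer room, say) is absorbed while sudden changes still stand out.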

“The goal is to eventually get this out of the lab and make it available beyond one or two research subjects,” Dr. Rezai said.

Mr. Bouton said researchers think not only about how to restore movement, but how to make it natural. Everyday actions, such as shaking someone’s hand, are actually a complex process, he said.

Scientists are trying to find ways to give patients sensory feedback, too.

“Touch is so important for this technology,” Mr. Bouton said. “When you shake hands, you want to feel the hand and adjust your grip.”

For now, one of the major obstacles is the small market size. Spinal-cord injuries affect approximately 150,000 people in the U.S., and not all of them are eligible to use such a device, said Peter Konrad, professor of neurosurgery at Vanderbilt University and vice president of the North American Neuromodulation Society.

Researchers are also studying the use of such devices in people with other conditions, including stroke and amyotrophic lateral sclerosis (ALS), although it isn’t yet clear whether extracting brain signals from such patients would work differently.

Dr. Konrad said new technology may need innovative funding methods. The project in the Nature paper was funded primarily by the university, Battelle and private philanthropists. It has been challenging to find successful business models to commercialize brain-computer technology, even when experiments demonstrate the difference restoring function can make in people’s lives.

“How much does it mean for Ian Burkhart to be able to move his hand?” Dr. Konrad asked.

Mr. Burkhart lives with his father and stepmother near Columbus, Ohio, studies business management in college and helps coach the high-school lacrosse team for which he used to play. He said the contrast between what he did in the lab during the three-times-a-week training sessions and what he does at home is sharp.

“I would like to be able to do things on my own instead of asking for help or waiting for someone else to help me with it,” he said. “The things I do in the lab, I would love to have in everyday life.”

Burkhart is only one example of the convergence of AI and robotics, as neurorobotics unlocks our cerebral cortex’s deepest secrets.