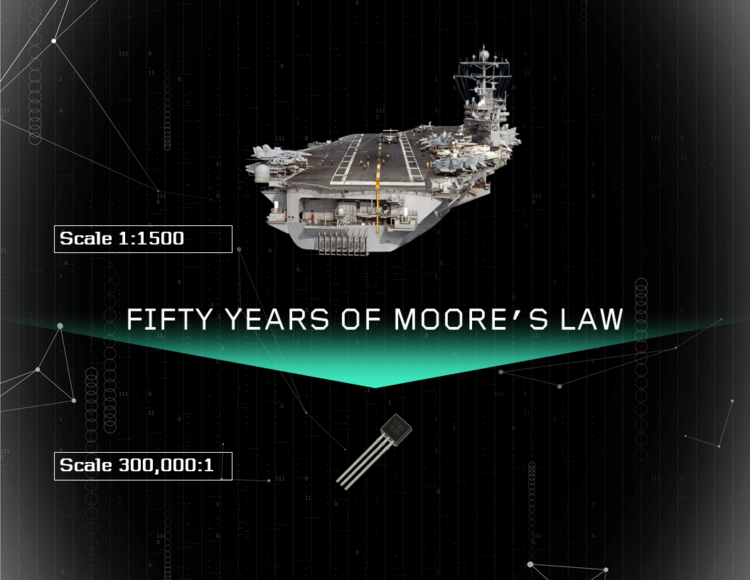

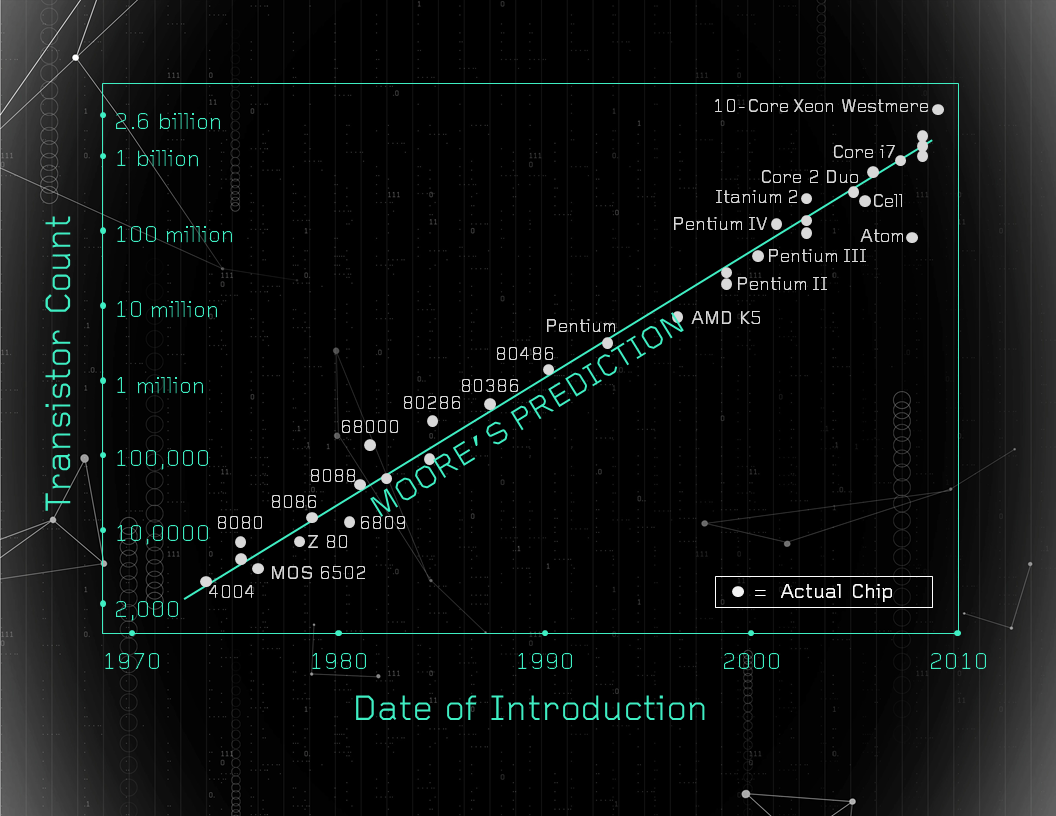

Moore’s Law is the prediction that the number of transistors on a microprocessor (computer chip) will double every two years, thereby roughly doubling performance. This “law,” which has held steady for the last 50 years, might now be endangered.
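As a quick sanity check on what "doubling every two years" compounds to, here is a minimal sketch. It assumes an idealized, perfectly regular doubling period, although real transistor counts fluctuate around the trend. The function name `growth_factor` is illustrative only.

```python
def growth_factor(years: float, doubling_period: float = 2.0) -> float:
    """Return how many times larger a quantity becomes after `years`
    if it doubles every `doubling_period` years (idealized Moore's Law)."""
    return 2.0 ** (years / doubling_period)


# Ten years of two-year doublings is five doublings: 2**5 = 32x.
print(growth_factor(10))   # 32.0
# Fifty years is twenty-five doublings: 2**25, roughly a 33.5-million-fold increase.
print(growth_factor(50))   # 33554432.0
```

Twenty-five doublings over five decades is why even a modest slowdown in the doubling period has a large cumulative effect.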

People have been saying Moore’s Law is dead for years, but thanks to some seriously impressive engineering at places like IBM and Intel, those people have been dead wrong. However, to keep up the pace of progress, by 2020 we’d need transistors so small (just a few nanometers in size) that the behavior of these devices would no longer be adequately described by classical physics. Their behavior would be governed instead by quantum uncertainty, rendering these devices hopelessly unreliable.

At the same time, a new breed of computing device is on the horizon, one that derives its immense power from those exact same quantum principles.

An expected journey

In 1965, Gordon Moore was asked by Electronics Magazine to predict what was going to happen in the semiconductor and electronics industries over the next decade. In just two and a half pages, he outlined with astonishing accuracy the entire evolution of electronics over the next 50 years. His paper, pragmatically titled “Cramming more components onto integrated circuits,” stated in its opening paragraph:

The future of integrated electronics is the future of electronics itself. The advantages of integration will bring about a proliferation of electronics, pushing this science into many new areas. Integrated circuits will lead to such wonders as home computers or terminals connected to central computers, automatic controls for automobiles, and personal portable communications equipment.

In one fell swoop, Moore predicted the rise of the personal computer, self-driving cars, the Internet and smartphones. This, however, is not the most famous or the most impressive of Moore’s predictions from that concise 1965 paper. That honor goes to his remarkably specific prediction about the rate at which semiconductor development would progress. He originally predicted that the number of components per chip would double every year, a rate he revised in 1975 to every two years; by extension, computer processing power would roughly double at a similar pace.

From predictive to prescriptive

While “it’s hard to make predictions, especially about the future” (as Danish physicist Niels Bohr supposedly said), Gordon Moore did so with remarkable clarity. His prediction became so widely accepted that in 1991, the U.S. Semiconductor Industry Association began formally compiling industry roadmaps outlining exactly what progress would need to be made, across all levels of the semiconductor supply chain, in order to keep pace with Moore’s Law. In effect, this act of road-mapping changed the nature of Moore’s Law from a predictive observation to a prescriptive mandate. Impressively, the global semiconductor industry has hit its targets every year since the formal road-mapping began.

Moore’s Law on the brink of extinction?

Today, there’s a new group of people saying Moore’s Law is teetering on the brink of extinction. Unlike the prior naysayers, this cohort makes a different argument, one that doesn’t stem from a simple lack of confidence in the ability of engineers to pack tiny transistors into ever denser configurations. This new group argues that transistors will soon become so small that they will approach fundamental, atomic-scale physical limits on how reliably electrons can be controlled.

Currently, the most advanced chips are built on a 14nm process, with transistor features smaller than a single virus. For Moore’s Law to continue, those features will need to shrink below 5nm by 2020. That’s fewer than ten silicon crystal unit cells across, only a few times the roughly 2nm width of a DNA double helix! At that scale, the laws of classical physics no longer suffice.
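The arithmetic behind the 2020 deadline can be sketched under a simplifying assumption that is not in the original: ideal scaling, in which transistor density per unit area grows with the square of the reduction in feature size. Node names such as "14nm" are only loosely tied to physical dimensions, so treat this as a back-of-the-envelope estimate. The helper names are hypothetical.

```python
import math

SILICON_LATTICE_NM = 0.543  # silicon's cubic lattice constant, about 0.543 nm


def density_gain(old_nm: float, new_nm: float) -> float:
    """Idealized areal density gain from shrinking features old_nm -> new_nm."""
    return (old_nm / new_nm) ** 2


def years_needed(gain: float, doubling_period: float = 2.0) -> float:
    """Years of steady doubling needed to reach a given density gain."""
    return math.log2(gain) * doubling_period


gain = density_gain(14, 5)                 # (14/5)**2, about 7.84x
print(round(gain, 2))                      # 7.84
print(round(years_needed(gain), 1))        # 5.9 years of two-year doublings
print(round(5 / SILICON_LATTICE_NM, 1))    # 9.2 lattice spacings across 5 nm
```

Under these assumptions, roughly three doublings (about six years) separate a mid-2010s 14nm process from a 5nm one, which lines up with the 2020 timeframe.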

The behavior of these systems would be better described by the infamously uncertain properties of quantum mechanics, uncertainties which would render these transistors far too unreliable for practical use.

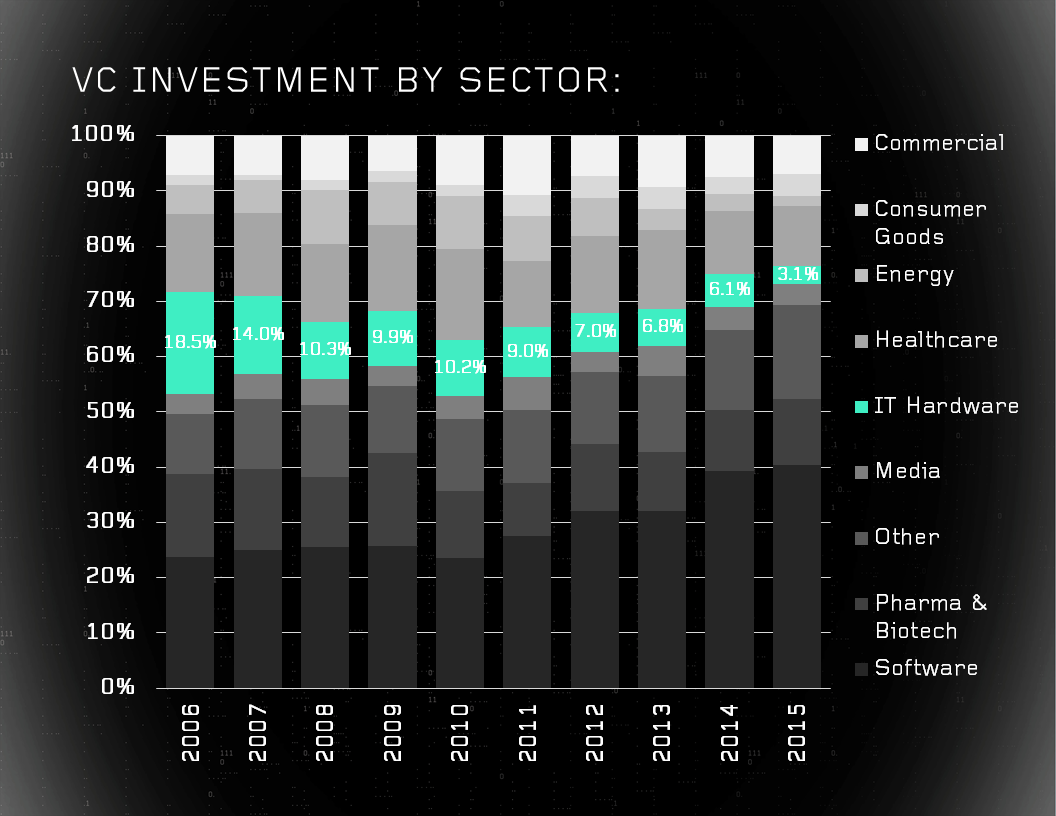

To the average venture capitalist, this is a troubling thought. For the last 50 years, the venture capital (VC) industry has piggybacked on the tireless engineering progress that kept Moore’s Law on track. The dynamism of our current wave of technological innovation is driven in no small part by these advances in information processing tools. The integrated circuit (aka chip) is the fundamental unit of hardware undergirding and enabling our modern digital, connected age.

Thanks to a consistent technological tailwind in the form of Moore’s Law, these tools have steadily been getting smaller, faster and cheaper for the last five decades. If this trend is about to reach a dead end, there will be serious implications.

To an optimistic venture capitalist like me, this represents an exciting opportunity.

Changing the shape of progress

To be clear, I am not hailing the end of progress for the semiconductor chip industry. However, I believe the nature of this industry’s progress is going to evolve. While the chips themselves won’t get much faster (i.e., clock speeds, the number of cycles a single processor core completes per second, will stay relatively flat), they will improve in other ways. In particular, we will continue to make advances in parallelization. Instead of extracting more performance out of a single processor, we will get even better at putting multiple processors (or “cores”) in one device, and at breaking up workloads so that each core can work on different parts of a given problem simultaneously. This is especially useful for multitasking (i.e., running multiple applications at the same time), and more generally for any computationally intensive task that can be broken up into discrete chunks.
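Parallelization has limits that are worth quantifying. A standard tool for this, not named in the original, is Amdahl's law: if a fraction p of a workload can be split across n cores and the rest stays serial, the best-case speedup is 1 / ((1 - p) + p / n). A minimal sketch, ignoring communication and scheduling overheads:

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Best-case speedup from Amdahl's law.

    parallel_fraction: share of the work (0..1) that can run on all cores at once.
    cores: number of cores the parallel share is divided among.
    """
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction
    return 1.0 / (serial + parallel_fraction / cores)


# A fully parallel task scales with the core count...
print(amdahl_speedup(1.0, 8))             # 8.0
# ...but if 5% of it is inherently serial, 8 cores give under 6x.
print(round(amdahl_speedup(0.95, 8), 2))  # 5.93
```

The smaller the serial share of a task, the closer its speedup gets to the number of cores, which is why workloads that split cleanly into independent chunks benefit most.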

To borrow an analogy from computer scientist Daniel Reed, while the Boeing 707 (introduced in 1957) and the Boeing Dreamliner (introduced in 2011) travel at the same speed, they are very different aircraft.

One small step-function

The job of a venture capitalist is to identify and invest in opportunities with immense potential—ours is distinctly a mission of value creation (as opposed to value capture). We foster innovation that occurs in leaps and bounds, with progress that looks more like a step-function graph than a straight line.

In venture capital, there’s a category of investments we colloquially like to call “moonshots.” These are investments in bold, innovative, audacious ideas with massive potential but correspondingly high risk. The first moonshot was probably when Arthur Rock, whom many consider the progenitor of the modern VC industry, convinced a group including Gordon Moore, Bob Noyce and six others (nicknamed the “traitorous eight”) to leave the Shockley Semiconductor Laboratory in 1957 and co-found Fairchild Semiconductor. Fairchild became the epicenter of progress for Silicon Valley’s nascent semiconductor industry, and therefore the epicenter of an electronics revolution.

In 1968, Gordon Moore and Bob Noyce left Fairchild to co-found a new startup called Intel. They were once again backed by venture capitalist Arthur Rock.

At the same time, Rock was growing his VC firm Rock & Co. and hired Dick Kramlich as his first associate. The pair went on to invest in numerous successful moonshots, including Apple when it was just a two-person team operating out of a garage in northern California.

Fun fact: Dick Kramlich later founded NEA, which is one of the world’s largest VC firms (and the firm where I work).

Those early VC moonshots catalyzed the hardware revolution that enabled the modern computing and digital communications age. As a result, we live in a time of unprecedented technological innovation and dynamism.

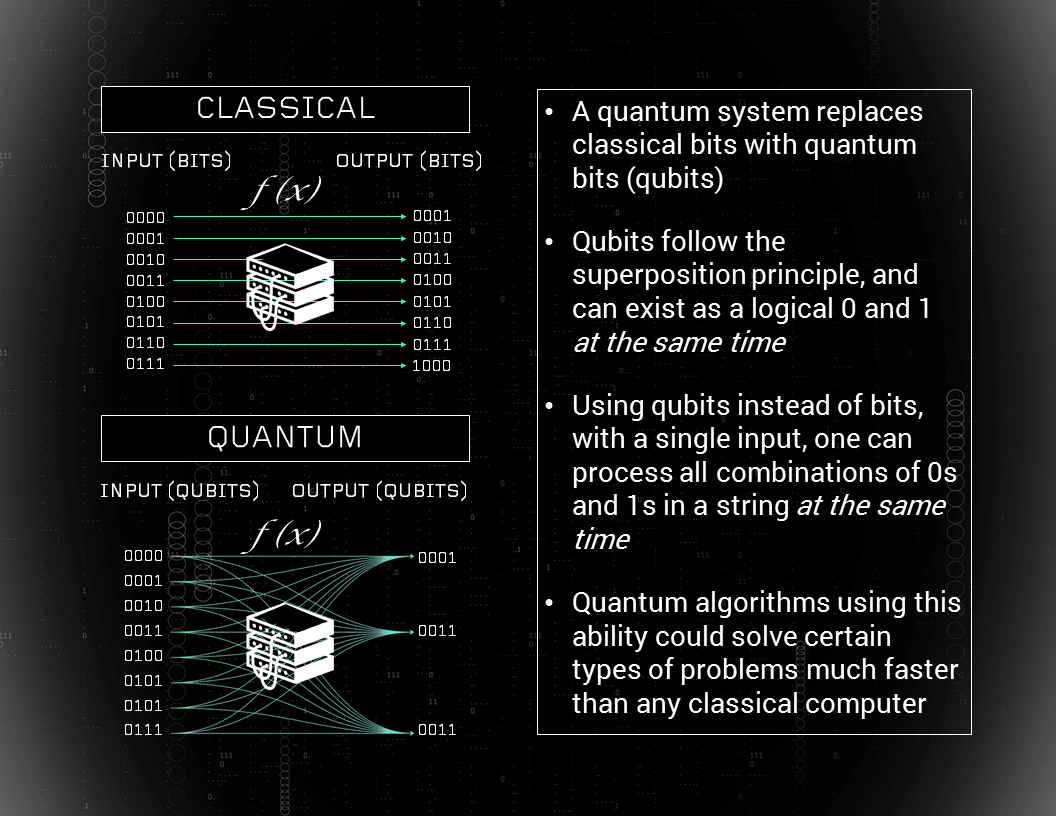

An investment in quantum computing could be one of VC’s next great moonshots. In short, a quantum computer is a computer that makes use of quantum states to store and process information. By exploiting certain quantum effects—in particular the phenomena of superposition and entanglement—quantum computers might be able to process complex information with almost unfathomable scale and speed.
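To make superposition and entanglement a little more concrete, here is a toy classical simulation of two qubits in plain Python. It is for illustration only and is not how real quantum hardware or any particular quantum SDK is programmed. A Hadamard gate puts the first qubit into superposition, and a CNOT gate then entangles it with the second, producing a so-called Bell state.

```python
import math

# A 2-qubit state is 4 amplitudes for |00>, |01>, |10>, |11>.
# Index = 2 * q0 + q1, so q0 is the left (most significant) bit.


def hadamard_q0(state):
    """Apply a Hadamard gate to qubit 0, mixing |0x> and |1x>."""
    s = 1 / math.sqrt(2)
    new = [0.0] * 4
    for q1 in (0, 1):
        a0, a1 = state[q1], state[2 + q1]
        new[q1] = s * (a0 + a1)
        new[2 + q1] = s * (a0 - a1)
    return new


def cnot_q0_q1(state):
    """Flip qubit 1 when qubit 0 is 1, i.e. swap the |10> and |11> amplitudes."""
    new = list(state)
    new[2], new[3] = state[3], state[2]
    return new


state = [1.0, 0.0, 0.0, 0.0]   # start in |00>
state = hadamard_q0(state)     # (|00> + |10>) / sqrt(2): superposition
state = cnot_q0_q1(state)      # (|00> + |11>) / sqrt(2): entangled Bell state
probs = [abs(a) ** 2 for a in state]
print([round(p, 3) for p in probs])   # [0.5, 0.0, 0.0, 0.5]
```

Measuring this state yields 00 or 11 with equal probability and never 01 or 10: each outcome is random on its own, but the two qubits always agree, which is the hallmark of entanglement.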

However, hardware investing has largely fallen out of favor among the VC community, primarily due to its capital intensity. As a result, not many venture capitalists are paying attention to the nascent field of quantum computing.

The possibilities are n-bits

Quantum computing is a quintessential VC moonshot: it’s a proposition with immense potential for value creation and a high risk of failure.

The promise of quantum computing is that it will render solvable entire classes of computational problems that are currently too complex to solve on classical hardware—problems such as integer factorization, simulation of complex systems, discrete optimization, unstructured searches of large parameter spaces, and many other use cases yet to be imagined. Quantum computers will not replace desktop machines. Their usefulness is not that they are simply faster counterparts to our classical computers; it is that they will enable solutions to entirely different challenges.
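For one of these classes, unstructured search, the size of the expected advantage is well understood. Grover's algorithm (not named in the original) finds one marked item among N unsorted items using roughly (π/4)·√N queries, versus up to N for a classical scan. That is a quadratic rather than exponential speedup, as this arithmetic sketch shows; it counts queries only and ignores error correction and other hardware overheads.

```python
import math


def classical_queries_worst_case(n_items: int) -> int:
    """A classical scan of unsorted data may have to check every item."""
    return n_items


def grover_iterations(n_items: int) -> int:
    """Approximate optimal Grover iteration count for one marked item."""
    return math.floor(math.pi / 4 * math.sqrt(n_items))


for exponent in (10, 20, 40):
    n = 2 ** exponent
    print(
        f"N = 2^{exponent}: classical {classical_queries_worst_case(n):,} "
        f"vs Grover ~{grover_iterations(n):,}"
    )
```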

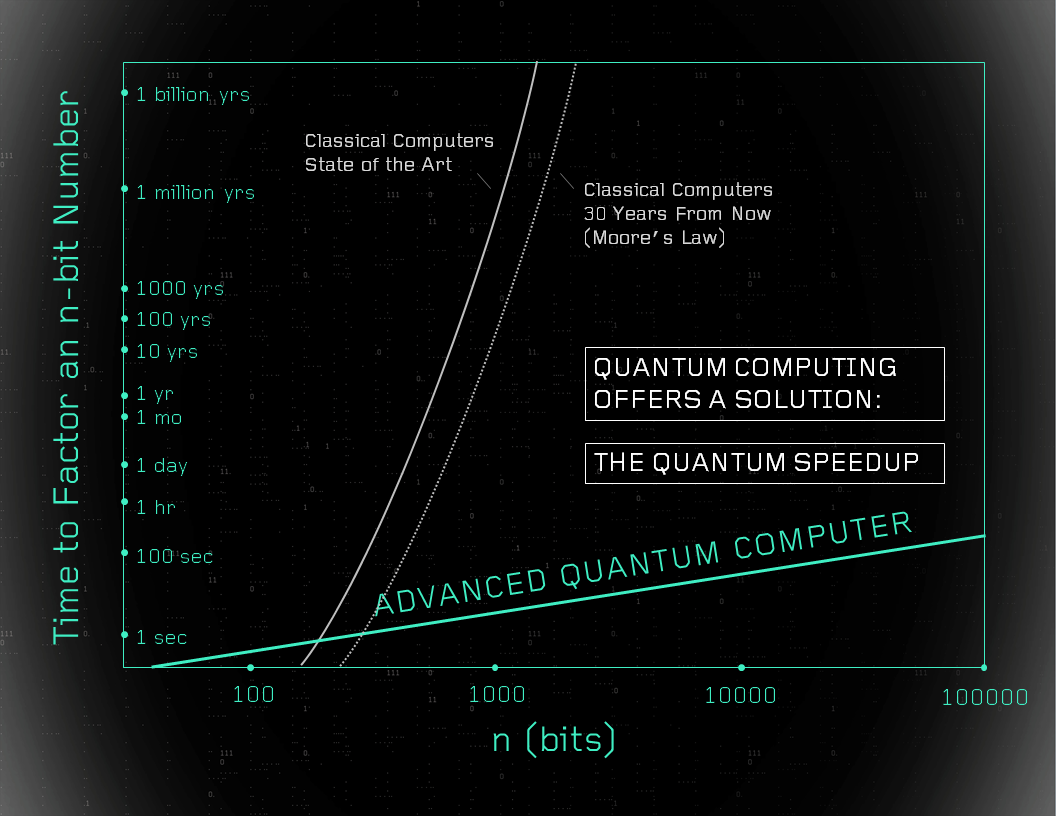

Perhaps the most famous application for a quantum computer is integer factorization. At first glance, integer factorization does not sound terribly interesting. In fact, it’s quite important: RSA, one of the most widely used public-key encryption schemes and a cornerstone of modern cryptography, relies on the inability of classical computers to efficiently factor large integers.

In essence, RSA publishes a very large integer that is the product of two secret prime numbers. Because classical computers can’t factor that number in any practical amount of time, they can’t recover the private key and break the cipher. However, a sufficiently large quantum computer running Shor’s algorithm could, hypothetically, factor these numbers efficiently, thereby breaking this kind of encryption.
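The link between factoring and codebreaking shows up clearly in a deliberately tiny, insecure RSA example. It uses textbook-sized primes chosen only for illustration, whereas real keys use moduli of 2048 bits or more. Anyone who can factor the public modulus n back into its primes p and q can recompute the private exponent. For real key sizes, that factoring step is infeasible on classical machines, and it is the step a large enough quantum computer could make efficient.

```python
import math


def make_keys(p: int, q: int, e: int):
    """Build a toy RSA key pair from two primes p, q and public exponent e."""
    n = p * q
    phi = (p - 1) * (q - 1)
    if math.gcd(e, phi) != 1:
        raise ValueError("e must be coprime with (p-1)(q-1)")
    d = pow(e, -1, phi)  # modular inverse (Python 3.8+)
    return (n, e), d


def factor_by_trial_division(n: int):
    """Recover p and q by brute force; hopeless for real 2048-bit moduli."""
    for candidate in range(2, math.isqrt(n) + 1):
        if n % candidate == 0:
            return candidate, n // candidate
    raise ValueError("n has no nontrivial factor")


public, private_d = make_keys(61, 53, 17)
n, e = public
message = 65
ciphertext = pow(message, e, n)  # encrypt with the public key
assert pow(ciphertext, private_d, n) == message

# An attacker who only knows (n, e) factors n, then rebuilds d.
p, q = factor_by_trial_division(n)
_, recovered_d = make_keys(p, q, e)
print(pow(ciphertext, recovered_d, n))  # 65: the message is recovered
```

Trial division works here only because n = 3233; the number of candidates grows with √n, which for a 2048-bit modulus is astronomically large.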

While codebreaking is a provocative potential application for quantum computers, and one that government agencies around the world are paying close attention to (in particular in the U.S. and China), it is unlikely to be among the first applications implemented, because factoring integers of the size used in real encryption would require a far larger and more reliable quantum computer than any built to date. There are numerous other possible applications that could add immense value to society.

Simulation and optimization

Another particularly difficult class of problems is the simulation and optimization of complex systems (specifically, systems whose complexity scales as 2^n). This is a very broad class of problems, but it can be roughly conceptualized as a system of n components that all interact with one another, so that the number of possible configurations and outcomes doubles with each component added.
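One concrete way to see the 2^n scaling: a full classical description of n interacting two-state particles (for example, n qubits or n electron spins) needs 2^n complex amplitudes. This sketch assumes each amplitude is stored as a 16-byte double-precision complex number, a common but not universal choice; the helper names are hypothetical.

```python
BYTES_PER_AMPLITUDE = 16  # assumption: complex128 = two 64-bit floats


def state_vector_bytes(n: int) -> int:
    """Memory needed to store every amplitude of an n-particle two-state system."""
    return (2 ** n) * BYTES_PER_AMPLITUDE


def human_readable(num_bytes: float) -> str:
    """Format a byte count using binary (1024-based) units."""
    for unit in ("B", "KiB", "MiB", "GiB", "TiB", "PiB", "EiB"):
        if num_bytes < 1024 or unit == "EiB":
            return f"{num_bytes:g} {unit}"
        num_bytes /= 1024


for n in (30, 40, 50):
    print(f"n = {n}: {2 ** n:,} amplitudes, {human_readable(state_vector_bytes(n))}")
```

Every ten additional particles multiplies the memory by 1,024 (16 GiB at n = 30, 16 PiB at n = 50), which is why brute-force classical simulation stalls at a few dozen particles, whereas a quantum computer with n qubits holds such a state physically.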

Example 1: catalyst design

A tangible example of a problem in this category is the simulation of molecular interactions. Modeling how complex compounds interact at a molecular level is notoriously difficult to do on classical computers, but should be achievable with the use of quantum computers. This could herald breakthroughs in materials science, biology, chemistry, medicine and a host of other fields.

Simulating complex molecular interactions will not only enhance our understanding of existing compounds, but will enable us to analyze hypothetical compounds in a safe, simulated environment, unlocking new frontiers by exponentially increasing the scope of what can be modeled and therefore tested and discovered. This type of simulation could be particularly useful for catalyst design. Some of industry’s most important processes rely on catalyst-enhanced reactions. One example is the Haber-Bosch process, which is at the center of virtually all modern fertilizer production. Unfortunately, this chemical reaction process is extremely energy- and resource-intensive. If a new, more efficient catalyst could be designed, massive amounts of energy and resources could be conserved, thereby increasing the efficiency and productivity of the global food supply.

Example 2: global warming

Molecular simulation could also help tackle the challenge of greenhouse gas emissions. If a compound could be designed to promote carbon dioxide fixation, for example, point-source CO2 emissions could be curtailed. In more general terms, a quantum computer could analyze lots of different substances and see how they would react with various greenhouse gases. Hypothetical substances could be continuously simulated until a particularly useful compound is identified, in this case one that would trap and sequester the given greenhouse gas. This compound could then be used at greenhouse gas point sources to curtail emissions.

Example 3: artificial intelligence

Quantum computing could also enable significant breakthroughs in artificial intelligence (AI) and machine learning. By encoding many combinations of inputs in superposition, and by taking advantage of a property dubbed “quantum tunneling” (exploited by quantum annealers), quantum computers may be able to tackle extraordinarily complex data challenges (such as discrete optimization over large datasets and complex topologies) that are intractable on classical hardware, where practitioners today must rely on imperfect heuristic techniques.
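Many discrete optimization problems are posed for quantum annealers as QUBO (quadratic unconstrained binary optimization) problems: choose binary variables x to minimize x^T Q x. That formulation is an assumption here, not something the original specifies. The brute-force classical solver below checks all 2^n assignments, illustrating why such problems become intractable as n grows; the 3-variable matrix Q is a made-up example.

```python
from itertools import product


def qubo_energy(x, Q):
    """Energy x^T Q x for a binary vector x and square matrix Q."""
    n = len(x)
    return sum(Q[i][j] * x[i] * x[j] for i in range(n) for j in range(n))


def brute_force_qubo(Q):
    """Try all 2**n binary assignments and return the lowest-energy one."""
    best_x, best_energy = None, None
    for x in product((0, 1), repeat=len(Q)):
        energy = qubo_energy(x, Q)
        if best_energy is None or energy < best_energy:
            best_x, best_energy = x, energy
    return best_x, best_energy


# Hypothetical example: each variable "wants" to be 1 (diagonal -1),
# but neighbouring pairs are penalized for both being 1 (off-diagonal +2).
Q = [
    [-1, 2, 0],
    [0, -1, 2],
    [0, 0, -1],
]
print(brute_force_qubo(Q))  # ((1, 0, 1), -2)
```

Quantum annealers, like classical heuristics such as simulated annealing, aim to find low-energy assignments without enumerating every candidate; neither is guaranteed to find the true optimum on large instances.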

The potential applications for quantum computers in the field of artificial intelligence are so compelling that NASA has a research team dedicated specifically to quantum AI. The team aims to demonstrate that quantum computing and quantum algorithms may someday dramatically improve the ability of computers to solve difficult optimization and machine learning problems.

Fun fact: there is even an argument that human intelligence arises from quantum computational processes in the brain, a theory dubbed “quantum mind.”

So, is it finally time to put chips on the table?

The quantum computing field is still in its adolescence. At the California Institute of Technology’s recent Quantum Summit, Dave Wineland (an American Nobel laureate physicist) told me that the various hardware approaches in the quantum computing field are like runners in a marathon: there might be some approaches taking the lead, but we’re so early in the race that each competitor can look over their shoulder and still see the starting line.

But he also said that he believes we will have a scientific breakthrough enabled by quantum computing within the next 10 years.

In his view, a seminal event in the field will be the moment when quantum hardware enables us to discover something new. It could be simulation; it could be new science. This does not require, as a prerequisite, a powerful, general-purpose quantum computer. We may be able to accomplish this objective with a modestly sized, special-purpose machine and some high-quality peripherals (aka “control electronics”).

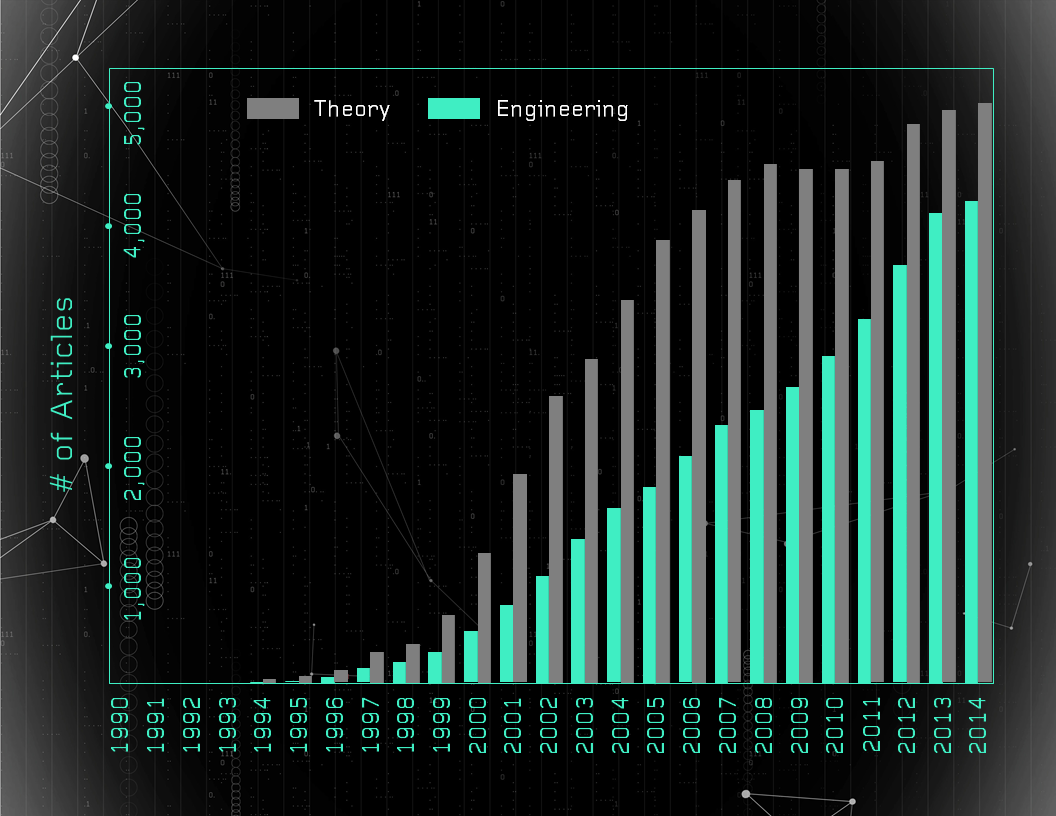

The field of quantum computing is undergoing a transition, from a discipline dominated by theory toward one that is increasingly practical. Put another way, quantum computing is moving from the realm of science toward the realm of engineering. This transition can be captured fairly literally by looking at the distribution of theoretical versus engineering academic papers published in the field.

From my vantage point as a venture capitalist, this is an exciting transition. We are moving closer to designing, engineering and constructing a functional device, and therefore moving closer to practical applications. And that’s good news for business, government and society.

Should venture capitalists double down?

Finally, I’d like to answer the question of whether the quantum computing field is ripe for VC investment. My view is that for the average VC firm, the answer is still no. The capital intensity, long timeline, and risks associated with an investment in quantum computing are not appropriate for an average-sized VC firm with typical fund dynamics. However, for VC firms that meet a certain set of characteristics, investing in quantum computing could be an interesting opportunity as part of a thoughtfully constructed portfolio. I believe those key characteristics are as follows:

- Technical Aptitude – The investor should understand the nuances of the technical approach they’re backing and that technology’s key strengths and weaknesses, both in an absolute sense and relative to other approaches. The investor should have a thesis as to the technical milestones that need to be achieved prior to investing.

- Deep Capital Base – A quantum computing venture is likely to require a large amount of capital, and it’s impossible to predict just how much. The investor should have relatively deep pockets in order to commit to an indeterminate investment size, while avoiding putting too much of their capital in one basket.

- Risk Tolerance – The investor must be able to withstand the potential loss of their entire investment. If the technology does not work, or if a competitor’s technology approach is found to be superior, there may be little to no salvageable value.

- Patient Capital – The investor must be willing to accept an open-ended timeline of potentially 10 years or more.

- Experience Across Cycles – The long timeline means a given quantum computing venture will probably need to survive one or more economic cycles.

- Regulatory Aptitude – As regulators and government agencies increase their focus on this field, investor familiarity with regulatory landscapes will be extremely helpful.

- Realistic Expectations – It won’t work overnight, and it’s difficult to predict if it will work at all.

A moonshot can go awry. But venture capitalists make moonshot investments when they are convinced that the potential rewards outweigh the risks. The question is not whether investments will be made in quantum computing. Multiple governments around the world, and multiple government agencies domestically, are already directing funds and technical efforts toward this challenge. Several private investments have already been made in the field. The question is whether the rewards will be worth the financial risk, and the wait.

An investment in quantum computing requires multiple characteristics not always found together in the same investor. But for investors who are able to lean in with their eyes wide open and fully understand the bet, an investment in quantum computing could be a moonshot worth taking.

This piece was coauthored by Harry Weller. Harry leads NEA’s east coast venture practice and is a founder of NEA’s China organization. He is recognized as one of America’s top venture capitalists with honors including the Forbes “Midas List,” where he is among six investors named to the list since inception, Washingtonian’s “Titans of Technology,” Business Insider’s “Top 5 East Coast VCs” and Washington Business Journal’s “Outstanding Board Director Award” and “Power 100 Business Leaders.” Harry is an Expert in Residence at Harvard University.