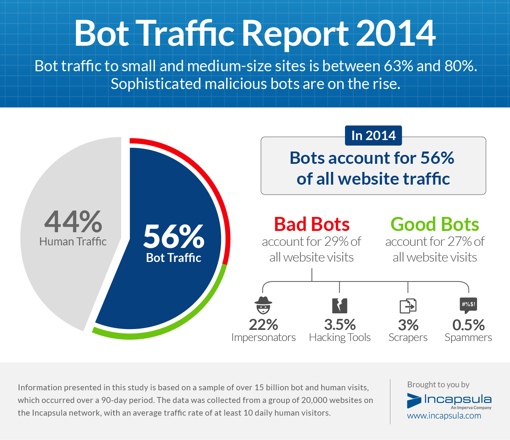

We may still be years away from humanoid robots outnumbering us humans here on Earth, but online, the balance has already tipped in the bots’ favor. According to a new report from web security firm Incapsula, 56 percent of all traffic on the Internet in 2014 came from automated, scripted bots. For smaller sites, visits by bots make up a whopping 80 percent of all traffic.

Bots, also known as web robots, are automated software scripts that perform tasks on the Internet. We often talk about them in a negative context: bots are responsible for filling inboxes and comment sections with spam, infecting computers with malware and carrying out so-called denial-of-service attacks. But bots are often used for good, too. Google, for example, uses bots to index sites for its search engine. You may have even used a bot yourself to create an RSS feed or to snipe an eBay auction.

Unfortunately, the bad bots currently seem to outnumber the good ones. The same study found that 29 percent of all web traffic came from malicious bots, compared with 27 percent from good bots. The vast majority of these bad bots are so-called impersonators that pretend to be human. They’re so good at what they do that Google is scrapping traditional CAPTCHAs, since the best bots can solve these distorted-text images 99.8 percent of the time.

Bot traffic is slightly down from 2013, when automated scripts made up 60 percent of all web traffic. That’s not necessarily good news, though: Incapsula attributes the drop to fewer people using good bots to power RSS feeds. Traffic from bad impersonator bots, meanwhile, is on the rise.

You can view the full results of the Incapsula 2014 Bot Traffic Report by visiting the company’s blog.

This article was written by Fox Van Allen and originally appeared on Techlicious.

[Infographic via Incapsula]